This is a repost. I convert the original PDF into a web format for easier accessibility and translation.

- Original document: Gemini: A Family Of Highly Capable Multimodal Models.pdf

- Chinese Translation: 双子座: 一组功能强大的多模态模型


Gemini Team, Google¹

This report introduces a new family of multimodal models, Gemini, that exhibit remarkable capabilities across image, audio, video, and text understanding. The Gemini family consists of Ultra, Pro, and Nano sizes, suitable for applications ranging from complex reasoning tasks to on-device memory-constrained use-cases. Evaluation on a broad range of benchmarks shows that our most-capable Gemini Ultra model advances the state of the art in 30 of 32 of these benchmarks - notably being the first model to achieve human-expert performance on the well-studied exam benchmark MMLU, and improving the state of the art in every one of the 20 multimodal benchmarks we examined. We believe that the new capabilities of Gemini models in cross-modal reasoning and language understanding will enable a wide variety of use cases and we discuss our approach toward deploying them responsibly to users.

1. Introduction

We present Gemini, a family of highly capable multimodal models developed at Google. We trained Gemini jointly across image, audio, video, and text data to build a model with both strong generalist capabilities across modalities and cutting-edge understanding and reasoning performance in each respective domain.

Gemini 1.0, our first version, comes in three sizes: Ultra for highly-complex tasks, Pro for enhanced performance and deployability at scale, and Nano for on-device applications. Each size is specifically tailored to address different computational limitations and application requirements. We evaluate the performance of Gemini models on a comprehensive suite of internal and external benchmarks covering a wide range of language, coding, reasoning, and multimodal tasks.

Gemini advances state-of-the-art in large-scale language modeling (Anil et al., 2023; Brown et al., 2020; Chowdhery et al., 2023; Hoffmann et al., 2022; OpenAI, 2023a; Radford et al., 2019; Rae et al., 2021), image understanding (Alayrac et al., 2022; Chen et al., 2022; Dosovitskiy et al., 2020; OpenAI, 2023b; Reed et al., 2022; Yu et al., 2022a), audio processing (Radford et al., 2023; Zhang et al., 2023), and video understanding (Alayrac et al., 2022; Chen et al., 2023). It also builds on the work on sequence models (Sutskever et al., 2014), a long history of work in deep learning based on neural networks (LeCun et al., 2015), and machine learning distributed systems (Barham et al., 2022; Bradbury et al., 2018; Dean et al., 2012) that enable large-scale training.

Our most capable model, Gemini Ultra, achieves new state-of-the-art results in 30 of 32 benchmarks we report on, including 10 of 12 popular text and reasoning benchmarks, 9 of 9 image understanding benchmarks, 6 of 6 video understanding benchmarks, and 5 of 5 speech recognition and speech translation benchmarks. Gemini Ultra is the first model to achieve human-expert performance on MMLU (Hendrycks et al., 2021a) - a prominent benchmark testing knowledge and reasoning via a suite of exams - with a score above 90%. Beyond text, Gemini Ultra makes notable advances on challenging multimodal reasoning tasks. For example, on the recent MMMU benchmark (Yue et al., 2023), which comprises questions about images on multi-discipline tasks requiring college-level subject knowledge and deliberate reasoning, Gemini Ultra achieves a new state-of-the-art score of 62.4%, outperforming the previous best model by more than 5 percentage points. It provides a uniform performance lift for video question answering and audio understanding benchmarks.

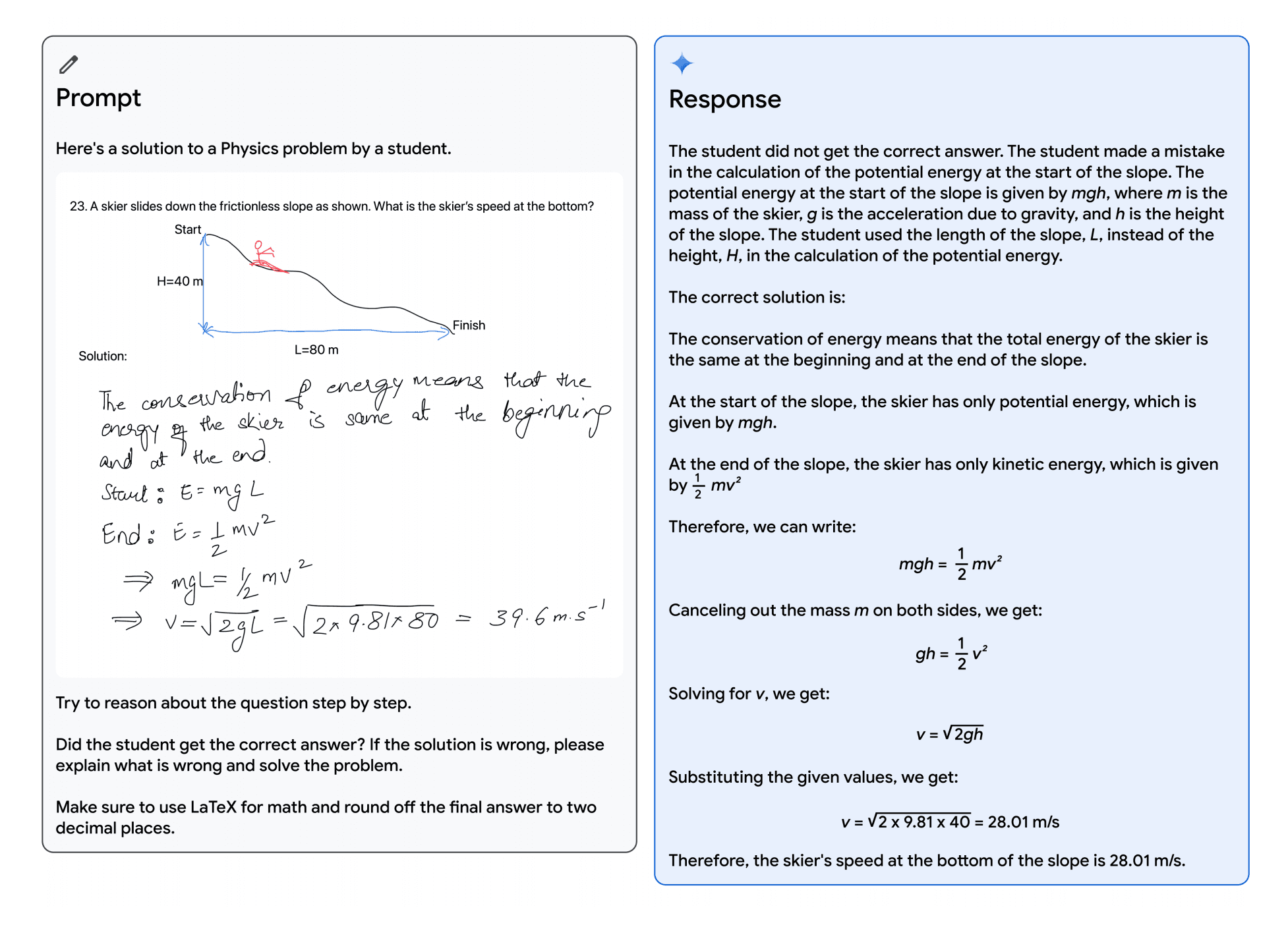

Qualitative evaluation showcases impressive crossmodal reasoning capabilities, enabling the model to understand and reason across an input sequence of audio, images, and text natively (see Figure 5 and Table 13). Consider the educational setting depicted in Figure 1 as an example. A teacher has drawn a physics problem of a skier going down a slope, and a student has worked through a solution to it. Using Gemini's multimodal reasoning capabilities, the model is able to read the messy handwriting, correctly understand the problem formulation, convert both the problem and solution to mathematical typesetting, identify the specific step of reasoning where the student went wrong in solving the problem, and then give a worked-through correct solution to the problem. This opens up exciting educational possibilities, and we believe the new multimodal and reasoning capabilities of Gemini models have dramatic applications across many fields.

Figure 1 | Verifying a student's solution to a physics problem. The model is able to correctly recognize all of the handwritten content and verify the reasoning. On top of understanding the text in the image, it needs to understand the problem setup and correctly follow instructions to generate LaTeX.

The reasoning capabilities of large language models show promise toward building generalist agents that can tackle more complex multi-step problems. The AlphaCode team built AlphaCode 2 (Leblond et al., 2023), a new Gemini-powered agent that combines Gemini's reasoning capabilities with search and tool-use to excel at solving competitive programming problems. AlphaCode 2 ranks within the top 15% of entrants on the Codeforces competitive programming platform, a large improvement over its state-of-the-art predecessor in the top 50% (Li et al., 2022).

In tandem, we advance the frontier of efficiency with Gemini Nano, a series of small models targeting on-device deployment. These models excel in on-device tasks such as summarization, reading comprehension, and text completion, and exhibit impressive capabilities in reasoning, STEM, coding, multimodal, and multilingual tasks relative to their sizes.

In the following sections, we first provide an overview of the model architecture, training infrastructure, and training dataset. We then present detailed evaluations of the Gemini model family, covering well-studied benchmarks and human-preference evaluations across text, code, image, audio and video - which include both English performance and multilingual capabilities. We also discuss our approach to responsible deployment,² including our process for impact assessments, developing model policies, evaluations, and mitigations of harm before deployment decisions. Finally, we discuss the broader implications of Gemini, its limitations alongside its potential applications - paving the way for a new era of research and innovation in AI.

2. Model Architecture

Gemini models build on top of Transformer decoders (Vaswani et al., 2017) that are enhanced with improvements in architecture and model optimization to enable stable training at scale and optimized inference on Google's Tensor Processing Units. They are trained to support 32k context length, employing efficient attention mechanisms (e.g., multi-query attention (Shazeer, 2019)). Our first version, Gemini 1.0, comprises three main sizes to support a wide range of applications, as summarized in Table 1.
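To make the reference to multi-query attention concrete, the following is a minimal pure-Python sketch of the general idea from Shazeer (2019): every query head attends over a single shared set of keys and values, which shrinks the key/value cache at inference time. It is an illustration only, not Gemini's implementation; the function name, the list-based tensor layout, and the causal masking are assumptions made for this example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def multi_query_attention(queries, keys, values):
    """Causal multi-query attention (illustrative sketch).

    queries: [heads][seq][dim]  one set of queries per head
    keys:    [seq][dim]         a single set shared by all heads
    values:  [seq][dim_v]       a single set shared by all heads
    """
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs = []
    for head_queries in queries:
        head_out = []
        for t, q in enumerate(head_queries):
            # Causal mask: position t attends only to positions 0..t.
            scores = [
                scale * sum(a * b for a, b in zip(q, keys[s]))
                for s in range(t + 1)
            ]
            weights = softmax(scores)
            head_out.append([
                sum(w * values[s][j] for s, w in enumerate(weights))
                for j in range(len(values[0]))
            ])
        outputs.append(head_out)
    return outputs
```

With standard multi-head attention the cache stores one key/value set per head; here it stores one set in total, regardless of the number of query heads.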

| Model size | Model description |
|---|---|
| Ultra | Our most capable model that delivers state-of-the-art performance across a wide range of highly complex tasks, including reasoning and multimodal tasks. It is efficiently serveable at scale on TPU accelerators due to the Gemini architecture. |
| Pro | A performance-optimized model in terms of cost as well as latency that delivers significant performance across a wide range of tasks. This model exhibits strong reasoning performance and broad multimodal capabilities. |
| Nano | Our most efficient model, designed to run on-device. We trained two versions of Nano, with 1.8B (Nano-1) and 3.25B (Nano-2) parameters, targeting low and high memory devices respectively. It is trained by distilling from larger Gemini models. It is 4-bit quantized for deployment and provides best-in-class performance. |

Table 1 | An overview of the Gemini 1.0 model family.
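Table 1 notes that Nano is 4-bit quantized for deployment, without describing the scheme. As a rough illustration of what 4-bit weight quantization means, the sketch below uses symmetric per-tensor rounding to signed integer codes in [-8, 7]. This particular scheme and the function names are assumptions chosen for simplicity, not the method used for Gemini Nano.

```python
def quantize_4bit(weights):
    """Symmetric per-tensor quantization to signed 4-bit codes in [-8, 7].

    Assumed scheme for illustration; the report does not specify one.
    """
    largest = max(abs(w) for w in weights)
    scale = largest / 7 if largest > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]
```

Each weight then needs 4 bits plus a shared scale, and the reconstruction error per weight is at most half a quantization step.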

Gemini models are trained to accommodate textual input interleaved with a wide variety of audio and visual inputs, such as natural images, charts, screenshots, PDFs, and videos, and they can produce text and image outputs (see Figure 2). The visual encoding of Gemini models is inspired by our own foundational work on Flamingo (Alayrac et al., 2022), CoCa (Yu et al., 2022a), and PaLI (Chen et al., 2022), with the important distinction that the models are multimodal from the beginning and can natively output images using discrete image tokens (Ramesh et al., 2021; Yu et al., 2022b).

Figure 2 | Gemini supports interleaved sequences of text, image, audio, and video as inputs (illustrated by tokens of different colors in the input sequence). It can output responses with interleaved image and text.

Video understanding is accomplished by encoding the video as a sequence of frames in the large context window. Video frames or images can be interleaved naturally with text or audio as part of the model input. The models can handle variable input resolution in order to spend more compute on tasks that require fine-grained understanding. In addition, Gemini can directly ingest audio signals at 16kHz from Universal Speech Model (USM) (Zhang et al., 2023) features. This enables the model to capture nuances that are typically lost when the audio is naively mapped to a text input (for example, see audio understanding demo on the website).

Training the Gemini family of models required innovations in training algorithms, dataset, and infrastructure. For the Pro model, the inherent scalability of our infrastructure and learning algorithms enables us to complete pretraining in a matter of weeks, leveraging a fraction of Ultra's resources. The Nano series of models leverages additional advancements in distillation and training algorithms to produce best-in-class small language models for a wide variety of tasks, such as summarization and reading comprehension, which power our next-generation on-device experiences.

3. Training Infrastructure

We trained Gemini models using TPUv5e and TPUv4 (Jouppi et al., 2023), depending on their sizes and configuration. Training Gemini Ultra used a large fleet of TPUv4 accelerators across multiple datacenters. This represents a significant increase in scale over our prior flagship model PaLM-2, which presented new infrastructure challenges. Scaling up the number of accelerators results in a proportionate decrease in the mean time between failures of hardware in the overall system. We minimized the rate of planned reschedules and preemptions, but genuine machine failures are commonplace across all hardware accelerators at such large scales, due to external factors such as cosmic rays (Michalak et al., 2012).
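The proportionate relationship between accelerator count and mean time between failures can be written down directly. The sketch below assumes that chips fail independently at a constant rate, a simplifying model not stated in the report, so that the system-level MTBF is the per-chip MTBF divided by the number of chips. The per-chip figure in the usage note is hypothetical.

```python
def system_mtbf_hours(chip_mtbf_hours: float, num_chips: int) -> float:
    """System MTBF under independent, constant-rate chip failures.

    Failure rates add across chips, so the expected time to the first
    failure anywhere in the system shrinks in proportion to chip count.
    """
    if num_chips <= 0:
        raise ValueError("num_chips must be positive")
    return chip_mtbf_hours / num_chips
```

For example, with a hypothetical per-chip MTBF of 40,960 hours, one 4096-chip SuperPod would see a failure about every 10 hours, and doubling the chip count would halve that interval.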

TPUv4 accelerators are deployed in "SuperPods" of 4096 chips, each connected to a dedicated optical switch, which can dynamically reconfigure 4x4x4 chip cubes into arbitrary 3D torus topologies in around 10 seconds (Jouppi et al., 2023). For Gemini Ultra, we decided to retain a small number of cubes per superpod to allow for hot standbys and rolling maintenance.

TPU accelerators primarily communicate over the high-speed inter-chip interconnect, but at Gemini Ultra scale, we combine SuperPods in multiple datacenters using Google's intra-cluster and inter-cluster network (Poutievski et al., 2022; Wetherall et al., 2023; Hong et al., 2018). Google's network latencies and bandwidths are sufficient to support the commonly used synchronous training paradigm, exploiting model parallelism within superpods and data-parallelism across superpods.

The 'single controller' programming model of JAX (Bradbury et al., 2018) and Pathways (Barham et al., 2022) allows a single Python process to orchestrate the entire training run, dramatically simplifying the development workflow. The GSPMD partitioner (Xu et al., 2021) in the XLA compiler partitions the training step computation, and the MegaScale XLA compiler (XLA, 2019) pass statically schedules appropriate collectives so that they maximally overlap with the computation with very little variation in step time.

Maintaining a high goodput³ at this scale would have been impossible using the conventional approach of periodic checkpointing of weights to persistent cluster storage. For Gemini, we instead made use of redundant in-memory copies of the model state, and on any unplanned hardware failures, we rapidly recover directly from an intact model replica. Compared to both PaLM and PaLM-2 (Anil et al., 2023), this provided a substantial speedup in recovery time, despite the significantly larger training resources being used. As a result, the overall goodput for the largest-scale training job increased from 85% to 97%.
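A back-of-the-envelope calculation shows why recovery time dominates goodput once failures are frequent. The sketch assumes goodput means the fraction of elapsed time spent computing useful new training steps; the failure count and per-failure costs in the usage note are invented for illustration and are not measurements from Gemini training.

```python
def goodput(total_hours, num_failures, recovery_hours, lost_work_hours=0.0):
    """Fraction of elapsed time spent on useful new training steps.

    recovery_hours:  time to restore model state after each failure
    lost_work_hours: steps recomputed since the last saved state, per failure
    """
    wasted = num_failures * (recovery_hours + lost_work_hours)
    return max(0.0, (total_hours - wasted) / total_hours)
```

With hypothetical values of 1000 elapsed hours and 50 failures, a total cost of 2 hours per failure gives a goodput of 0.90, while cutting that cost to 0.2 hours gives 0.99. Restoring from an in-memory replica targets exactly this per-failure cost.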

Training at unprecedented scale invariably surfaces new and interesting systems failure modes - and in this instance one of the problems that we needed to address was that of "Silent Data Corruption (SDC)" (Dixit et al., 2021; Hochschild et al., 2021; Vishwanathan et al., 2015). Although these are extremely rare, the scale of Gemini means that we can expect SDC events to impact training every week or two. Rapidly detecting and removing faulty hardware required several new techniques that exploit deterministic replay to isolate incorrect computations, combined with proactive SDC scanners on idle machines and hot standbys. Our fully deterministic infrastructure allowed us to quickly identify root causes (including hardware failures) during the development leading up to the Ultra model, and this was a crucial ingredient towards stable training.
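The core idea behind using deterministic replay to isolate silent data corruption can be sketched in a few lines: if a training step is fully deterministic, re-running it on a known-good machine must give a bit-identical result, so any checksum mismatch points at the suspect hardware. The toy update rule, the `faulty` flag that simulates corruption, and the function names are all assumptions for illustration; the report does not describe the actual tooling.

```python
import hashlib
import struct


def train_step(weights, grads, faulty=False):
    """Toy deterministic update; `faulty` simulates a silent hardware error."""
    updated = [w - 0.01 * g for w, g in zip(weights, grads)]
    if faulty:
        updated[0] += 1e-7  # small corruption, no exception is raised
    return updated


def checksum(values):
    """Bit-exact fingerprint of a list of floats."""
    packed = struct.pack(f"{len(values)}d", *values)
    return hashlib.sha256(packed).hexdigest()


def replay_detects_corruption(weights, grads, suspect_is_faulty):
    """Compare the suspect machine's result against a deterministic replay."""
    suspect = train_step(weights, grads, faulty=suspect_is_faulty)
    reference = train_step(weights, grads)  # replay on known-good hardware
    return checksum(suspect) != checksum(reference)
```

The comparison only works because the step is deterministic; with nondeterministic kernels, a mismatch could not be attributed to hardware.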

4. Training Dataset

Gemini models are trained on a dataset that is both multimodal and multilingual. Our pretraining dataset uses data from web documents, books, and code, and includes image, audio, and video data.

We use the SentencePiece tokenizer (Kudo and Richardson, 2018) and find that training the tokenizer on a large sample of the entire training corpus improves the inferred vocabulary and subsequently improves model performance. For example, we find Gemini models can efficiently tokenize non-Latin scripts which can, in turn, benefit model quality as well as training and inference speed.

The number of tokens used to train the largest models was determined following the approach in Hoffmann et al. (2022). The smaller models are trained for significantly more tokens to improve performance for a given inference budget, similar to the approach advocated in Touvron et al. (2023a).
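For a sense of scale, the result of Hoffmann et al. (2022) is often summarized as a rule of thumb of roughly 20 training tokens per parameter for compute-optimal training. The sketch below applies that approximation; the constant, the function names, and the multiplier are assumptions for illustration, and the report does not disclose the token counts actually used for any Gemini model.

```python
# Rough rule of thumb often attributed to Hoffmann et al. (2022).
# An assumption for illustration, not a value taken from the Gemini report.
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(num_params):
    """Approximate compute-optimal token budget for a given model size."""
    return TOKENS_PER_PARAM * num_params


def overtrained_tokens(num_params, multiplier):
    """Token budget when training well past the compute-optimal point."""
    return multiplier * compute_optimal_tokens(num_params)
```

Under this heuristic, a 1.8B-parameter model would be compute-optimal at about 36B tokens; training a small model for "significantly more tokens" corresponds to a multiplier well above 1, trading extra training compute for better quality at a fixed inference cost.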

We apply quality filters to all datasets, using both heuristic rules and model-based classifiers. We also perform safety filtering to remove harmful content. We filter our evaluation sets from our training corpus. The final data mixtures and weights were determined through ablations on smaller models. We stage training to alter the mixture composition during training - increasing the weight of domain-relevant data towards the end of training. We find that data quality is critical to a highly performing model, and believe that many interesting questions remain around finding the optimal dataset distribution for pretraining.
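One common way to filter evaluation sets out of a training corpus is n-gram overlap: drop any training document that shares a sufficiently long word sequence with an evaluation example. The sketch below shows that general technique. The whitespace tokenization, the default n-gram length, and the function names are assumptions; the report does not specify how its filtering was done.

```python
def ngrams(text, n):
    """Set of lowercase word n-grams in a string."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def filter_contaminated(train_docs, eval_examples, n=8):
    """Keep only training documents sharing no n-gram with any eval example."""
    eval_ngrams = set()
    for example in eval_examples:
        eval_ngrams |= ngrams(example, n)
    return [doc for doc in train_docs if not (ngrams(doc, n) & eval_ngrams)]
```

A smaller `n` removes more near-duplicates at the cost of more false positives on common phrases.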

5. Evaluation

The Gemini models are natively multimodal, as they are trained jointly across text, image, audio, and video. One open question is whether this joint training can result in a model which has strong capabilities in each domain - even when compared to models and approaches that are narrowly tailored to single domains. We find this to be the case: Gemini sets a new state of the art across a wide range of text, image, audio, and video benchmarks.

5.1. Text

5.1.1. Academic Benchmarks

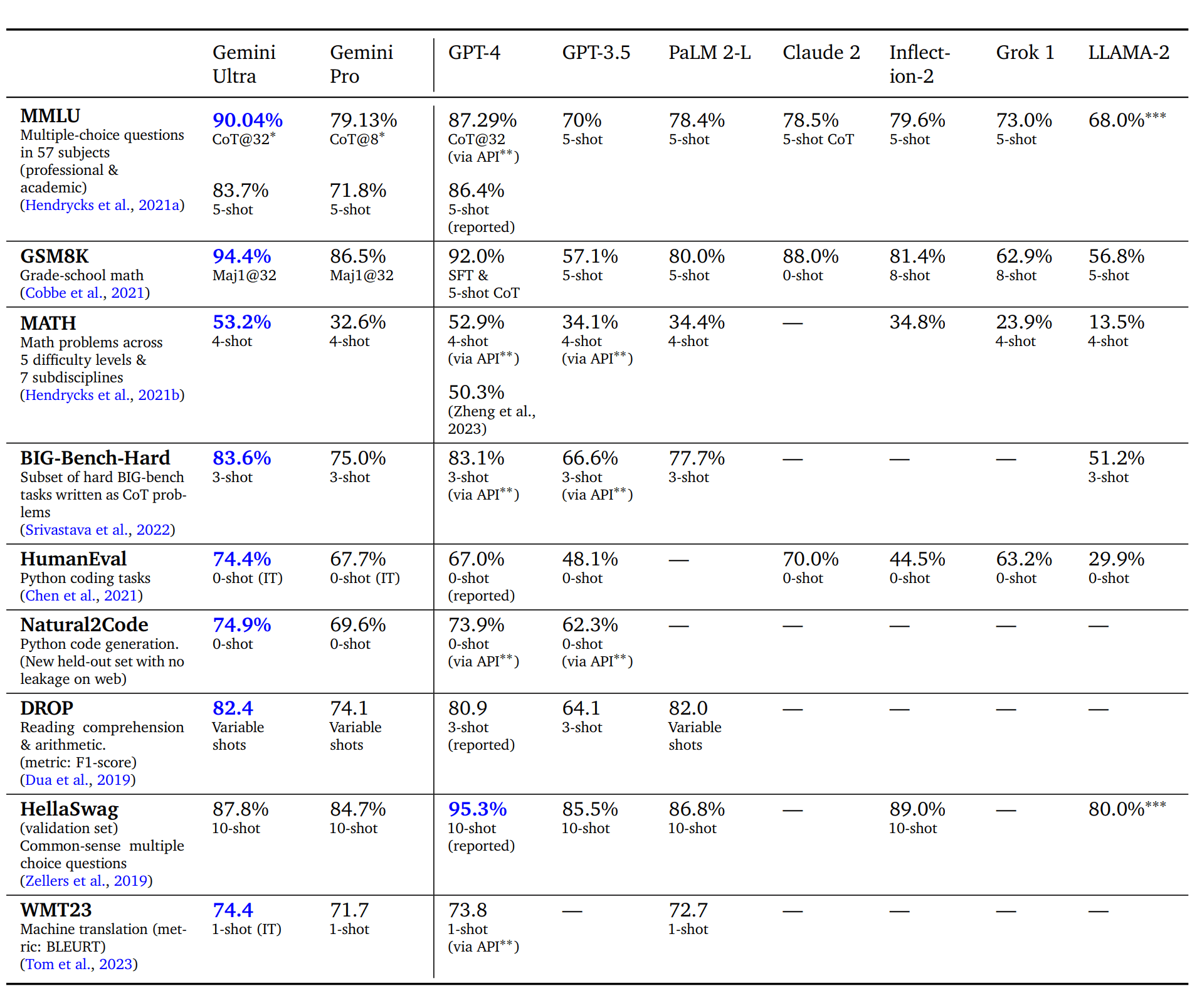

We compare Gemini Pro and Ultra to a suite of external LLMs and our previous best model PaLM 2 across a series of text-based academic benchmarks covering reasoning, reading comprehension, STEM, and coding. We report these results in Table 2. Broadly, we find that Gemini Pro outperforms inference-optimized models such as GPT-3.5 and performs comparably with several of the most capable models available, and Gemini Ultra outperforms all current models. In this section, we examine some of these findings.

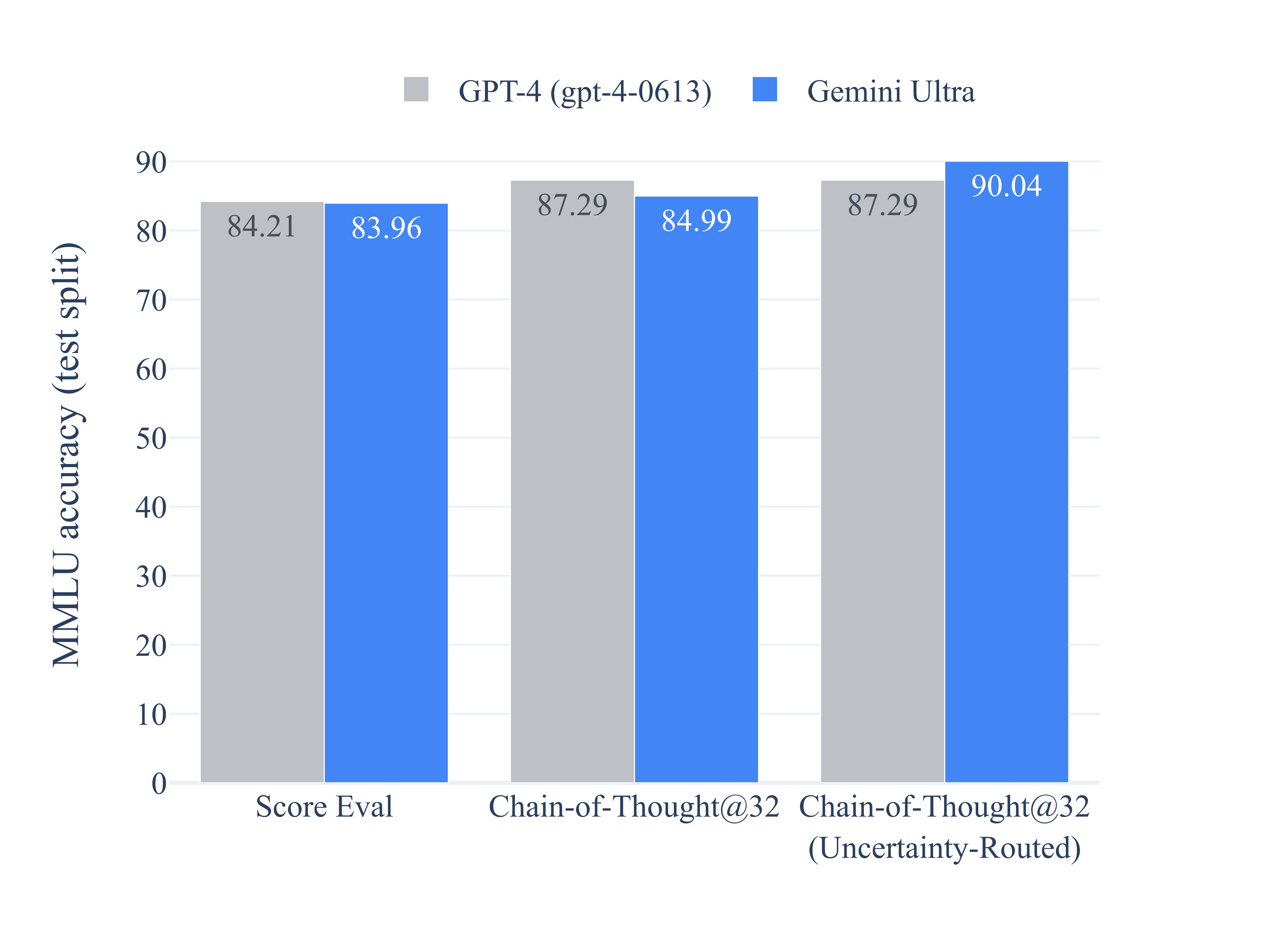

On MMLU (Hendrycks et al., 2021a), Gemini Ultra outperforms all existing models, achieving an accuracy of 90.04%. MMLU is a holistic exam benchmark, which measures knowledge across a set of 57 subjects. Human expert performance is gauged at 89.8% by the benchmark authors, and Gemini Ultra is the first model to exceed this threshold, with the prior state-of-the-art result at 86.4%. Achieving high performance requires specialist knowledge across many domains (e.g. law, biology, and history), alongside reading comprehension and reasoning. We find Gemini Ultra achieves the highest accuracy when used in combination with a chain-of-thought prompting approach (Wei et al., 2022) that accounts for model uncertainty. The model produces a chain of thought with k samples, for example 8 or 32. If there is a consensus above a preset threshold (selected based on the validation split), it selects this answer; otherwise it reverts to a greedy sample based on maximum likelihood choice without chain of thought. We refer the reader to the appendix for a detailed breakdown of how this approach compares with only chain-of-thought prompting or only greedy sampling.
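The selection rule described above can be sketched as a small function. It assumes the final answers have already been extracted from the k chain-of-thought samples and that the greedy answer is computed separately; the function name, the argument names, and the strict "greater than" comparison at the threshold are assumptions for illustration.

```python
from collections import Counter


def uncertainty_routed_answer(cot_answers, greedy_answer, threshold):
    """Pick the majority chain-of-thought answer if consensus is high enough.

    cot_answers:   final answers from k sampled chains of thought
    greedy_answer: answer from greedy decoding without chain of thought
    threshold:     minimum vote share, tuned on a validation split
    """
    if not cot_answers:
        return greedy_answer
    top_answer, votes = Counter(cot_answers).most_common(1)[0]
    if votes / len(cot_answers) > threshold:
        return top_answer
    return greedy_answer
```

For example, with 8 samples of which 6 agree on "B" and a threshold of 0.7, the vote share of 0.75 clears the threshold and "B" is returned; if the samples were evenly split, the greedy answer would be used instead.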

In mathematics, a field commonly used to benchmark the analytical capabilities of models, Gemini Ultra shows strong performance on both elementary exams and competition-grade problem sets. For the grade-school math benchmark, GSM8K (Cobbe et al., 2021), we find Gemini Ultra reaches 94.4% accuracy with chain-of-thought prompting and self-consistency (Wang et al., 2022) compared to the previous best accuracy of 92% with the same prompting technique. Similar positive trends are observed on more difficult math problems drawn from middle- and high-school math competitions (MATH benchmark), with the Gemini Ultra model outperforming all competitor models, reaching 53.2% using 4-shot prompting. The model also outperforms the state of the art on even harder tasks derived from American Mathematical Competitions (150 questions from 2022 and 2023). Smaller models perform poorly on this challenging task, scoring close to random, but Gemini Ultra can solve 32% of the questions, compared to the 30% solve rate for GPT-4.

Gemini Ultra also excels in coding, a popular use case of current LLMs. We evaluate the model on many conventional and internal benchmarks and also measure its performance as part of more complex reasoning systems such as AlphaCode 2 (see section 5.1.7 on complex reasoning systems). For example, on HumanEval, a standard code-completion benchmark (Chen et al., 2021) mapping function descriptions to Python implementations, instruction-tuned Gemini Ultra correctly implements 74.4% of problems. On a new held-out evaluation benchmark for Python code generation tasks, Natural2Code, where we ensure no web leakage, Gemini Ultra achieves the highest score of 74.9%.

Table 2 | Gemini performance on text benchmarks with external comparisons and PaLM 2-L.

∗ The model produces a chain of thought with k = 8 or 32 samples. If there is a consensus above a threshold (chosen based on the validation split), it selects this answer; otherwise it reverts to a greedy sample. Further analysis in Appendix 9.1.

∗∗ Results self-collected via the API in Nov, 2023.

∗∗∗ Results shown use the decontaminated numbers from the Touvron et al. (2023b) report, as the most relevant comparison to Gemini models, which have been decontaminated as well.

Evaluation on these benchmarks is challenging and may be affected by data contamination. We performed an extensive leaked data analysis after training to ensure the results we report here are as scientifically sound as possible, but still found some minor issues and decided not to report results on e.g. LAMBADA (Paperno et al., 2016). As part of the evaluation process, on a popular benchmark, HellaSwag (Zellers et al., 2019), we find that an additional hundred finetuning steps on specific website extracts corresponding to the HellaSwag training set (which were not included in the Gemini pretraining set) improve the validation accuracy of Gemini Pro to 89.6% and Gemini Ultra to 96.0%, when measured with 1-shot prompting (we measured that GPT-4 obtained 92.3% when evaluated 1-shot via the API). This suggests that the benchmark results are susceptible to the pretraining dataset composition. We choose to report HellaSwag decontaminated results only in a 10-shot evaluation setting. We believe there is a need for more robust and nuanced standardized evaluation benchmarks with no leaked data. We therefore evaluate Gemini models on several new held-out evaluation datasets that were recently released, such as WMT23 and Math-AMC 2022-2023 problems, or internally generated from non-web sources, such as Natural2Code. We refer the reader to the appendix for a comprehensive list of our evaluation benchmarks.

Even so, model performance on these benchmarks gives us an indication of the model capabilities and where they may provide impact on real-world tasks. For example, Gemini Ultra's impressive reasoning and STEM competencies pave the way for advancements in LLMs within the educational domain⁴. The ability to tackle complex mathematical and scientific concepts opens up exciting possibilities for personalized learning and intelligent tutoring systems.

5.1.2. Trends In Capabilities

We investigate the trends in capabilities across the Gemini model family by evaluating them on a holistic harness of more than 50 benchmarks in six different capabilities, noting that some of the most notable benchmarks were discussed in the last section. These capabilities are: "Factuality" covering open/closed-book retrieval and question answering tasks; "Long-Context" covering long-form summarization, retrieval and question answering tasks; "Math/Science" including tasks for mathematical problem solving, theorem proving, and scientific exams; "Reasoning" tasks that require arithmetic, scientific, and commonsense reasoning; "Multilingual" tasks for translation, summarization, and reasoning in multiple languages. Please see the appendix for a detailed list of tasks included for each capability.

Figure 3 | Language understanding and generation performance of Gemini model family across different capabilities (normalized by the Gemini Pro model).

We observe consistent quality gains with increased model size in Figure 3, especially in reasoning, math/science, summarization and long-context. Gemini Ultra is the best model across the board for all six capabilities. Gemini Pro, the second-largest model in the Gemini family of models, is also quite competitive while being a lot more efficient to serve.

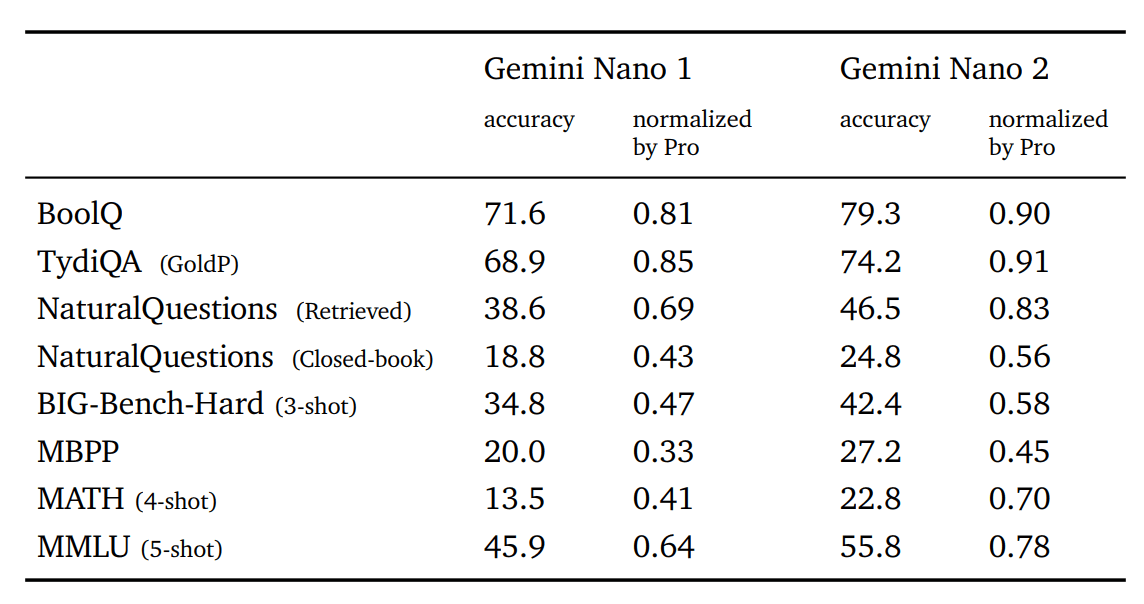

5.1.3. Nano

Bringing AI closer to the user, we discuss the Gemini Nano-1 and Nano-2 models engineered for on-device deployments. These models excel in summarization and reading comprehension tasks with per-task finetuning. Figure 3 shows the performance of these pretrained models in comparison to the much larger Gemini Pro model, while Table 3 dives deeper into specific factuality, coding, Math/Science, and reasoning tasks. Nano-1 and Nano-2 model sizes are only 1.8B and 3.25B parameters respectively. Despite their size, they show exceptionally strong performance on factuality, i.e. retrieval-related tasks, and significant performance on reasoning, STEM, coding, multimodal and multilingual tasks. With new capabilities accessible to a broader set of platforms and devices, the Gemini models expand accessibility to everyone.

Table 3 | Performance of Gemini Nano series on factuality, summarization, reasoning, coding and STEM tasks compared to the significantly larger Gemini Pro model.

5.1.4. Multilinguality

The multilingual capabilities of the Gemini models are evaluated using a diverse set of tasks requiring multilingual understanding, cross-lingual generalization, and the generation of text in multiple languages. These tasks include machine translation benchmarks (WMT 23 for high-medium-low resource translation; Flores, NTREX for low and very low resource languages), summarization benchmarks (XLSum, Wikilingua), and translated versions of common benchmarks (MGSM: professionally translated into 11 languages).

Machine Translation

Translation is a canonical benchmark in machine learning with a rich history. We evaluated Gemini Ultra with instruction-tuning applied (see section 6.4.2) on the entire set of language pairs in the WMT 23 translation benchmark in a few-shot setting. Overall, we found that Gemini Ultra (and other Gemini models) performed remarkably well at translating from English to any other language, and surpassed the LLM-based translation methods when translating out-of-English, on high-resource, mid-resource and low-resource languages. In the WMT 23 out-of-English translation tasks, Gemini Ultra achieved the highest LLM-based translation quality, with an average BLEURT (Sellam et al., 2020) score of 74.8, compared to GPT-4's score of 73.6, and PaLM 2's score of 72.2. When averaged across all language pairs and directions for WMT 23, we see a similar trend with Gemini Ultra 74.4, GPT-4 73.8 and PaLM 2-L 72.7 average BLEURT scores on this benchmark.

Table 4 | Performance of Gemini models on WMT 23 translation benchmark. All numbers with 1-shot.

In addition to the languages and translation tasks above, we also evaluate Gemini Ultra on very low-resource languages. These languages were sampled from the tail of the following language sets: Flores-200 (Tamazight and Kanure), NTREX (North Ndebele), and an internal benchmark (Quechua). For these languages, both from and into English, Gemini Ultra achieved an average chrF score of 27.0 in 1-shot setup, while the next-best model, PaLM 2-L, achieved a score of 25.3.

Multilingual Math and Summarization

Beyond translation, we evaluated how well Gemini performs in challenging tasks across a range of languages. We specifically investigated the math benchmark MGSM (Shi et al., 2023), which is a translated variant of the math benchmark GSM8K (Cobbe et al., 2021). We find Gemini Ultra achieves an accuracy of 79.0%, an advance over PaLM 2-L which scores 74.7%, when averaged across all languages in an 8-shot setup. We also benchmark Gemini on the multilingual summarization benchmarks - XLSum (Hasan et al., 2021) and WikiLingua (Ladhak et al., 2020). In XLSum, Gemini Ultra reached an average ROUGE-L score of 17.6 compared to 15.4 for PaLM 2. For WikiLingua, Gemini Ultra (5-shot) trails behind PaLM 2 (3-shot) measured in BLEURT score. See Table 5 for the full results. Overall, the diverse set of multilingual benchmarks shows that Gemini family models have a broad language coverage, enabling them to also reach locales and regions with low-resource languages.

Table 5 | Performance of Gemini models on multilingual math and summarization.

5.1.5. Long Context

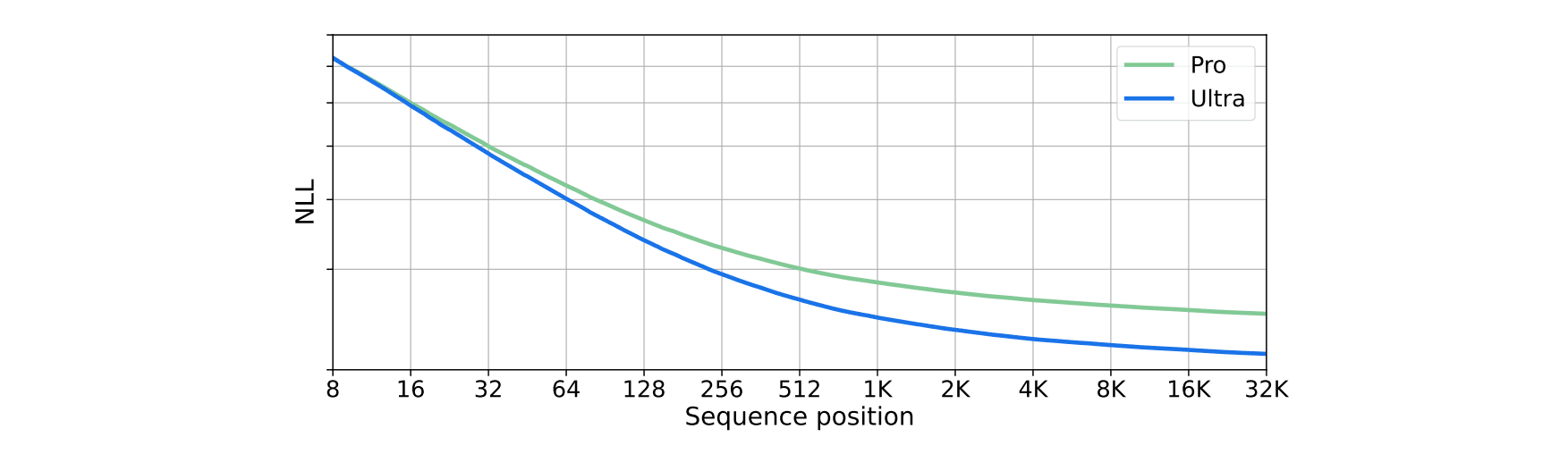

Gemini models are trained with a sequence length of 32,768 tokens and we find that they make use of their context length effectively. We first verify this by running a synthetic retrieval test: we place key-value pairs at the beginning of the context, then add long filler text, and ask for the value associated with a particular key. We find that the Ultra model retrieves the correct value with 98% accuracy when queried across the full context length. We further investigate this by plotting the negative log likelihood (NLL) versus the token index across a held-out set of long documents in Figure 4. We find that the NLL decreases with sequence position up to the full 32K context length. The longer context length of Gemini models enables new use cases such as retrieval over documents and video understanding discussed in section 5.2.2.
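The synthetic retrieval test can be approximated with a small harness: build a prompt with key-value pairs at the start, pad it with filler, ask for one key, and score exact matches. The prompt wording, the filler text, and the `model_fn` callable (any function mapping a prompt string to an answer string) are hypothetical stand-ins; the report does not give the exact format it used.

```python
import random


def build_retrieval_prompt(num_pairs, filler_words, seed=0):
    """Return (prompt, expected_value) for one synthetic retrieval trial."""
    rng = random.Random(seed)
    pairs = {f"key-{i}": f"value-{rng.randrange(10**6)}" for i in range(num_pairs)}
    header = "\n".join(f"{k}: {v}" for k, v in pairs.items())
    filler = " ".join(["lorem"] * filler_words)  # stands in for long filler text
    query_key = rng.choice(sorted(pairs))
    prompt = f"{header}\n{filler}\nWhat is the value for {query_key}?"
    return prompt, pairs[query_key]


def retrieval_accuracy(model_fn, trials, num_pairs=10, filler_words=1000):
    """Fraction of trials in which model_fn returns exactly the right value."""
    correct = 0
    for seed in range(trials):
        prompt, expected = build_retrieval_prompt(num_pairs, filler_words, seed)
        correct += model_fn(prompt).strip() == expected
    return correct / trials
```

Increasing `filler_words` pushes the queried pair further from the question, which is what probes whether the model uses the full context.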

Figure 4 | Negative log likelihood as a function of token index across 32K context length on a held-out set of long documents.

5.1.6. Human Preference Evaluations

Human preference of the model outputs provides an important indication of quality that complements automated evaluations. We have evaluated the Gemini models in side-by-side blind evaluations where human raters judge responses of two models to the same prompt. We instruction tune (Ouyang et al., 2022) the pretrained model using techniques discussed in section 6.4.2. The instruction-tuned version of the model is evaluated on a range of specific capabilities, such as following instructions, creative writing, multimodal understanding, long-context understanding, and safety. These capabilities encompass a range of use cases inspired by current user needs and research-inspired potential future use cases.

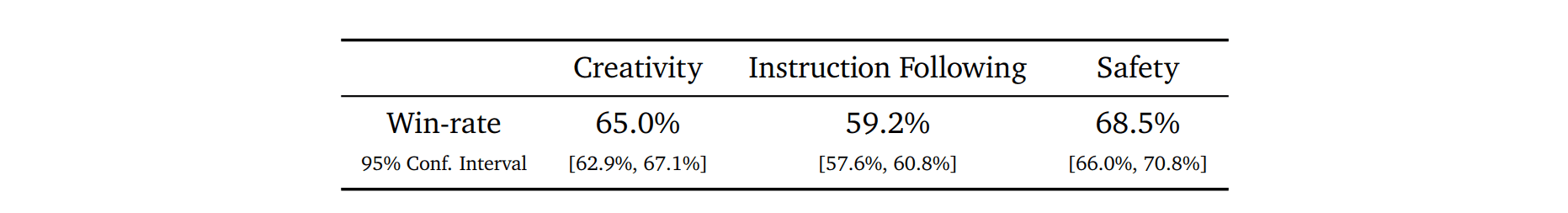

Instruction-tuned Gemini Pro models provide a large improvement on a range of capabilities: raters preferred the Gemini Pro model over the PaLM 2 model API 65.0% of the time in creative writing, 59.2% of the time in following instructions, and 68.5% of the time for safer responses, as shown in Table 6. These improvements directly translate into a more helpful and safer user experience.

Table 6 | Win rate of Gemini Pro over PaLM 2 (text-bison@001) with 95% confidence intervals.
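For readers unfamiliar with how a win rate and its 95% confidence interval relate to raw side-by-side judgments, the sketch below uses the normal approximation to the binomial. This is one standard choice and an assumption here; the report does not state which interval method was used, how ties were handled, or how many comparisons were collected, so the counts in the usage note are hypothetical.

```python
import math


def win_rate_with_ci(wins, total, z=1.96):
    """Win rate with a normal-approximation confidence interval.

    z=1.96 corresponds to a two-sided 95% interval.
    Returns (rate, lower, upper), clipped to [0, 1].
    """
    p = wins / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)
```

With a hypothetical 650 wins out of 1000 comparisons, the interval is roughly 0.65 plus or minus 0.03; with only 100 comparisons it would be about three times wider.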

5.1.7. Complex Reasoning Systems

Gemini can also be combined with additional techniques such as search and tool-use to create powerful reasoning systems that can tackle more complex multi-step problems. One example of such a system is AlphaCode 2, a new state-of-the-art agent that excels at solving competitive programming problems (Leblond et al., 2023). AlphaCode 2 uses a specialized version of Gemini Pro - tuned on competitive programming data similar to the data used in Li et al. (2022) - to conduct a massive search over the space of possible programs. This is followed by a tailored filtering, clustering and reranking mechanism. Gemini Pro is fine-tuned both to be a coding model to generate proposal solution candidates, and to be a reward model that is leveraged to recognize and extract the most promising code candidates.
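The filter, cluster, and rerank stages can be illustrated with a toy pipeline over candidate programs represented as Python callables. Everything specific here is an assumption made for the example: filtering by public example tests, clustering by identical behavior on probe inputs, ordering clusters by size, and the `score_fn` callable standing in for a reward model. The report names the stages but does not describe how AlphaCode 2 implements them.

```python
from collections import defaultdict


def _safe_run(program, x):
    """Run a candidate on one input, treating any crash as a wrong answer."""
    try:
        return program(x)
    except Exception:
        return None


def select_candidates(candidates, public_tests, probe_inputs, score_fn, top_k=1):
    """Toy filter -> cluster -> rerank over candidate programs (callables)."""
    # 1. Filtering: keep programs that pass every public example test.
    passing = [
        f for f in candidates
        if all(_safe_run(f, x) == y for x, y in public_tests)
    ]
    # 2. Clustering: group programs that behave identically on probe inputs.
    clusters = defaultdict(list)
    for f in passing:
        signature = tuple(_safe_run(f, x) for x in probe_inputs)
        clusters[signature].append(f)
    # 3. Reranking: largest clusters first, best-scored member from each.
    ranked = sorted(clusters.values(), key=len, reverse=True)
    return [max(cluster, key=score_fn) for cluster in ranked[:top_k]]
```

Clustering by behavior means that many superficially different programs implementing the same function count as a single submission, so the limited number of submissions is spent on genuinely distinct solutions.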

AlphaCode 2 is evaluated on Codeforces,⁵ the same platform as AlphaCode, on 12 contests from division 1 and 2, for a total of 77 problems. AlphaCode 2 solved 43% of these competition problems, a 1.7x improvement over the prior record-setting AlphaCode system which solved 25%. Mapping this to competition rankings, AlphaCode 2 built on top of Gemini Pro sits at an estimated 85th percentile on average - i.e. it performs better than 85% of entrants. This is a significant advance over AlphaCode, which only outperformed 50% of competitors.

The composition of powerful pretrained models with search and reasoning mechanisms is an exciting direction towards more general agents; another key ingredient is deep understanding across a range of modalities which we discuss in the next section.

5.2. Multimodal

Gemini models are natively multimodal. These models exhibit the unique ability to seamlessly combine their capabilities across modalities (e.g. extracting information and spatial layout out of a table, a chart, or a figure) with the strong reasoning capabilities of a language model (e.g. its state-of-the-art performance in math and coding) as seen in examples in Figures 5 and 12. The models also show strong performance in discerning fine-grained details in inputs, aggregating context across space and time, and applying these capabilities over a temporally-related sequence of video frames and/or audio inputs.

The sections below provide more detailed evaluation of the model across different modalities (image, video, and audio), together with qualitative examples of the model's capabilities for image generation and the ability to combine information across different modalities.

5.2.1. Image Understanding

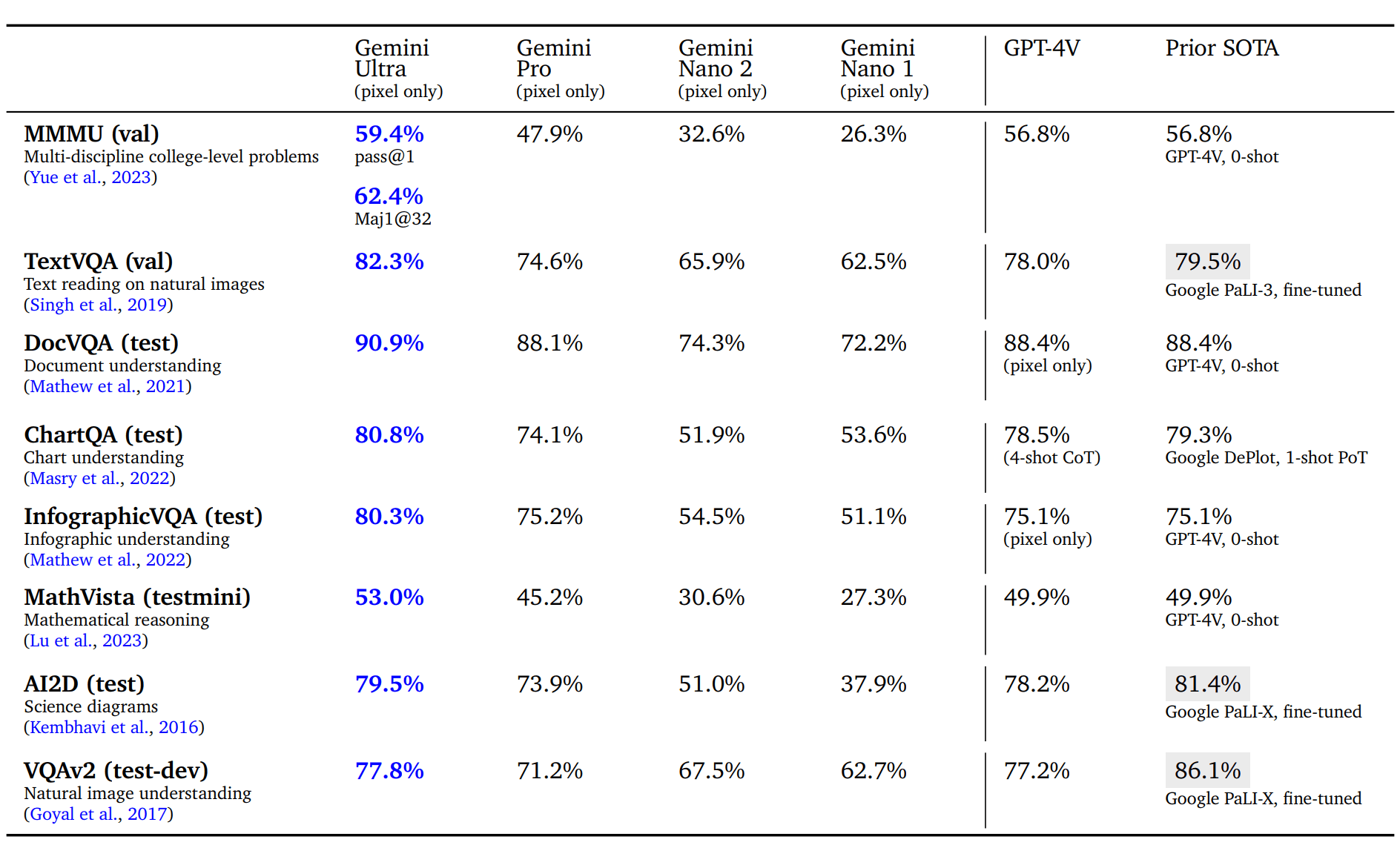

We evaluate the model on four different capabilities: high-level object recognition using captioning or question-answering tasks such as VQAv2; fine-grained transcription using tasks such as TextVQA and DocVQA requiring the model to recognize low-level details; chart understanding requiring spatial understanding of input layout using ChartQA and InfographicVQA tasks; and multimodal reasoning using tasks such as Ai2D, MathVista and MMMU. For zero-shot QA evaluation, the model is instructed to provide short answers aligned with the specific benchmark. All numbers are obtained using greedy sampling and without any use of external OCR tools.

Table 7 | Image understanding. Gemini Ultra consistently outperforms existing approaches even in zero-shot, especially for OCR-related image understanding tasks for natural images, text, documents, and figures without using any external OCR engine (‘pixel only’). Many existing approaches fine-tune on the respective tasks, highlighted in gray, which makes the comparison with 0-shot not apples-to-apples.

We find that Gemini Ultra is state of the art across a wide range of image-understanding benchmarks in Table 7. It achieves strong performance across a diverse set of tasks such as answering questions on natural images and scanned documents as well as understanding infographics, charts and science diagrams. When compared against publicly reported results from other models (most notably GPT-4V), Gemini is better in zero-shot evaluation by a significant margin. It also exceeds several existing models that are specifically fine-tuned on the benchmark's training sets for the majority of tasks. The capabilities of the Gemini models lead to significant improvements in the state of the art on academic benchmarks like MathVista (+3.1%)⁶ or InfographicVQA (+5.2%).

MMMU (Yue et al., 2023) is a recently released evaluation benchmark consisting of questions about images across 6 disciplines, with multiple subjects within each discipline, that require college-level knowledge to solve. Gemini Ultra achieves the best score on this benchmark, advancing the state-of-the-art result by more than 5 percentage points, and outperforms the previous best result in 5 of 6 disciplines (see Table 8), thus showcasing its multimodal reasoning capabilities.

Table 8 | Gemini Ultra performance on the MMMU benchmark (Yue et al., 2023) per discipline. Each discipline covers multiple subjects, requiring college-level knowledge and complex reasoning.

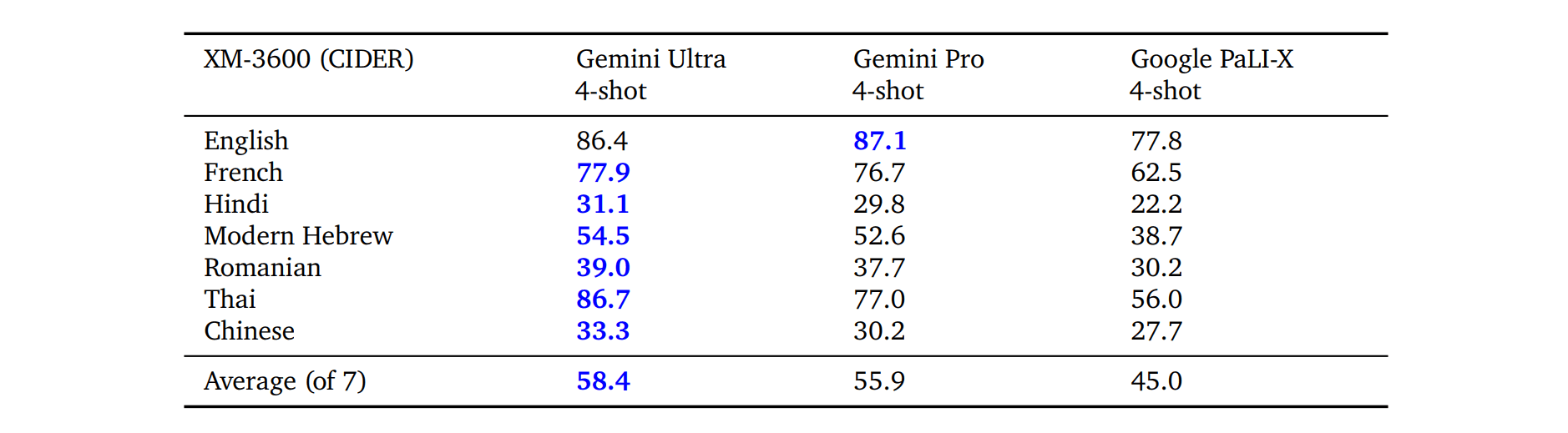

Gemini models are also capable of operating across modalities and a diverse set of global languages simultaneously, both for image understanding tasks (e.g., images containing text in Icelandic) and for generation tasks (e.g., generating image descriptions for a wide range of languages). We evaluate the performance of generating image descriptions on a selected subset of languages in the Crossmodal-3600 (XM-3600) benchmark in a 4-shot setting, using the Flamingo evaluation protocol (Alayrac et al., 2022), without any fine-tuning for all models. As shown in Table 9, Gemini models achieve a significant improvement over the existing best model, Google PaLI-X.

Table 9 | Multilingual image understanding. Gemini models outperform existing models in captioning images in many languages when benchmarked on a subset of languages in the XM-3600 dataset (Thapliyal et al., 2022).

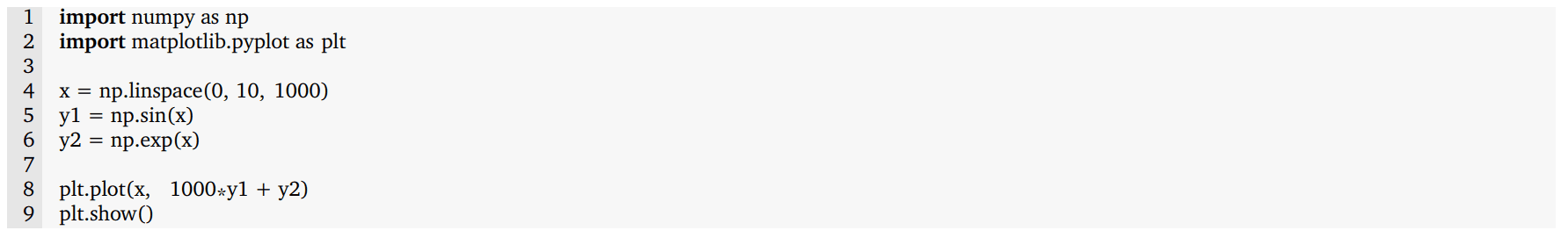

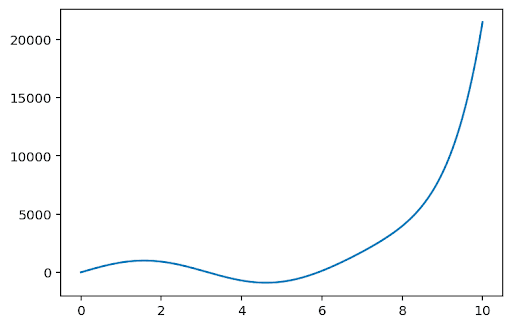

Figure 5 | Gemini’s multimodal reasoning capabilities to generate matplotlib code for rearranging the subplots. The multimodal prompt is shown at the top-left in gray. Gemini Ultra’s response, including its generated code, is shown in the right column in blue. The bottom left figure shows the rendered version of the generated code. Successfully solving this task shows the model’s ability to combine several capabilities: (1) recognition of the functions depicted in the plots; (2) inverse graphics to infer the code that would have generated the subplots; (3) instruction-following to put subplots in their desired positions; and (4) abstract reasoning to infer that the exponential plot must stay in its original place, because the sine plot must move out of the way for the 3-dimensional plot.

Qualitative evaluation in Figure 5 illustrates an example of Gemini Ultra's multimodal reasoning capabilities. The model is required to solve the task of generating matplotlib code that would rearrange a set of subplots provided by the user. The model output shows that it successfully solves this task by combining multiple capabilities: understanding the user plot, inferring the code required to generate it, following user instructions to put subplots in their desired positions, and abstract reasoning about the output plot. This highlights Gemini Ultra's native multimodality and alludes to its more complex reasoning abilities across interleaved sequences of image and text. We refer the reader to the appendix for more qualitative examples.

5.2.2. Video Understanding

Understanding video input is an important step towards a useful generalist agent. We measure the video understanding capability across several established benchmarks that are held-out from training. These tasks measure whether the model is able to understand and reason over a temporally-related sequence of frames. For each video task, we sample 16 equally-spaced frames from each video clip and feed them to the Gemini models. For the YouTube video datasets (all datasets except NextQA and the Perception test), we evaluate the Gemini models on videos that were still publicly available in the month of November, 2023.
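To make the frame sampling step concrete, here is a minimal sketch of picking 16 equally-spaced frame indices from a clip. The report does not say which spacing convention was used; this sketch assumes each sampled frame is the midpoint of one of 16 equal-length segments, and the function name is hypothetical.

```python
def equally_spaced_frame_indices(num_frames: int, num_samples: int = 16) -> list[int]:
    """Return indices of num_samples frames spread evenly over a clip.

    Assumption: each index is the midpoint of one of num_samples equal
    segments. The report does not specify the exact convention.
    """
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    if num_frames <= num_samples:
        # Clip is too short to subsample; keep every frame.
        return list(range(num_frames))
    segment = num_frames / num_samples
    return [int(segment * (i + 0.5)) for i in range(num_samples)]


# A 160-frame clip yields frames 5, 15, ..., 155.
print(equally_spaced_frame_indices(160))
```

Sampling segment midpoints avoids always including the very first and last frames, which are often fades or title cards; including the endpoints instead would be an equally valid reading of "equally-spaced".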

Gemini Ultra achieves state-of-the-art results on various few-shot video captioning tasks as well as zero-shot video question answering tasks as shown in Table 10. This demonstrates its capability of strong temporal reasoning across several frames. Figure 21 in the appendix provides a qualitative example of understanding the video of the ball-striking mechanics of a soccer player and reasoning about how the player can improve their game.

Table 10 | Few-shot video understanding across tasks and languages on selected academic benchmarks. The reported metric is CIDER for video captioning, WUPS for NextQA, and top-1 accuracy for the Perception Test and ActivityNet-QA. For ActivityNet-QA, we use the Video-LLAVA (Lin et al., 2023) evaluation protocol.

5.2.3. Image Generation

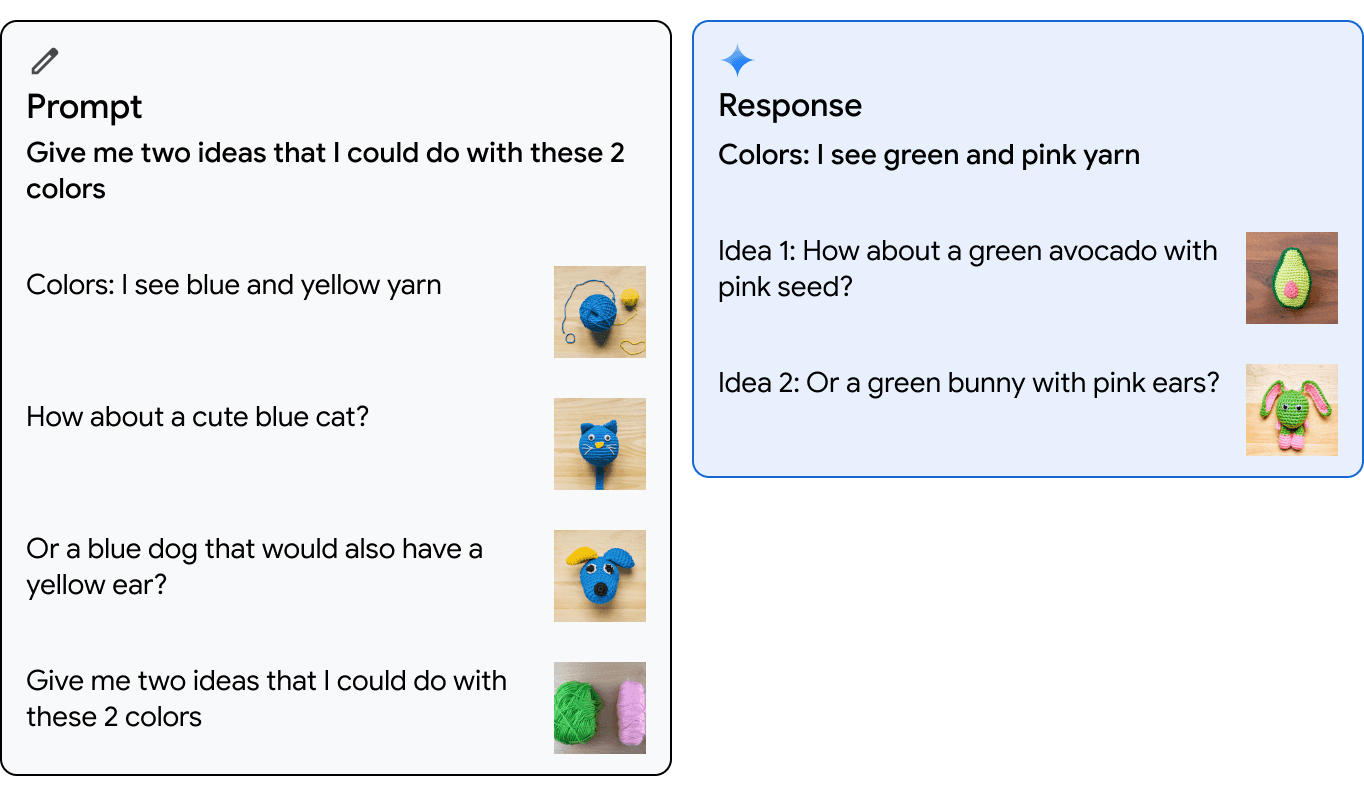

Gemini is able to output images natively, without having to rely on an intermediate natural language description that can bottleneck the model's ability to express images. This uniquely enables the model to generate images with prompts using interleaved sequences of image and text in a few-shot setting. For example, the user might prompt the model to design suggestions of images and text for a blog post or a website (see Figure 10 in the appendix).

Figure 6 shows an example of image generation in a 1-shot setting. The Gemini Ultra model is prompted with one example of interleaved image and text where the user provides two colors (blue and yellow) and image suggestions of creating a cute blue cat or a blue dog with yellow ear from yarn. The model is then given two new colors (pink and green) and asked for two ideas about what to create using these colors. The model successfully generates an interleaved sequence of images and text with suggestions to create a cute green avocado with pink seed or a green bunny with pink ears from yarn.

Figure 6 | Image Generation. Gemini can output multiple images interleaved with text given a prompt composed of image and text. In the left figure, Gemini Ultra is prompted in a 1-shot setting with a user example of generating suggestions of creating cat and dog from yarn when given two colors, blue and yellow. Then, the model is prompted to generate creative suggestions with two new colors, pink and green, and it generates images of creative suggestions to make a cute green avocado with pink seed or a green bunny with pink ears from yarn as shown in the right figure.

5.2.4. Audio Understanding

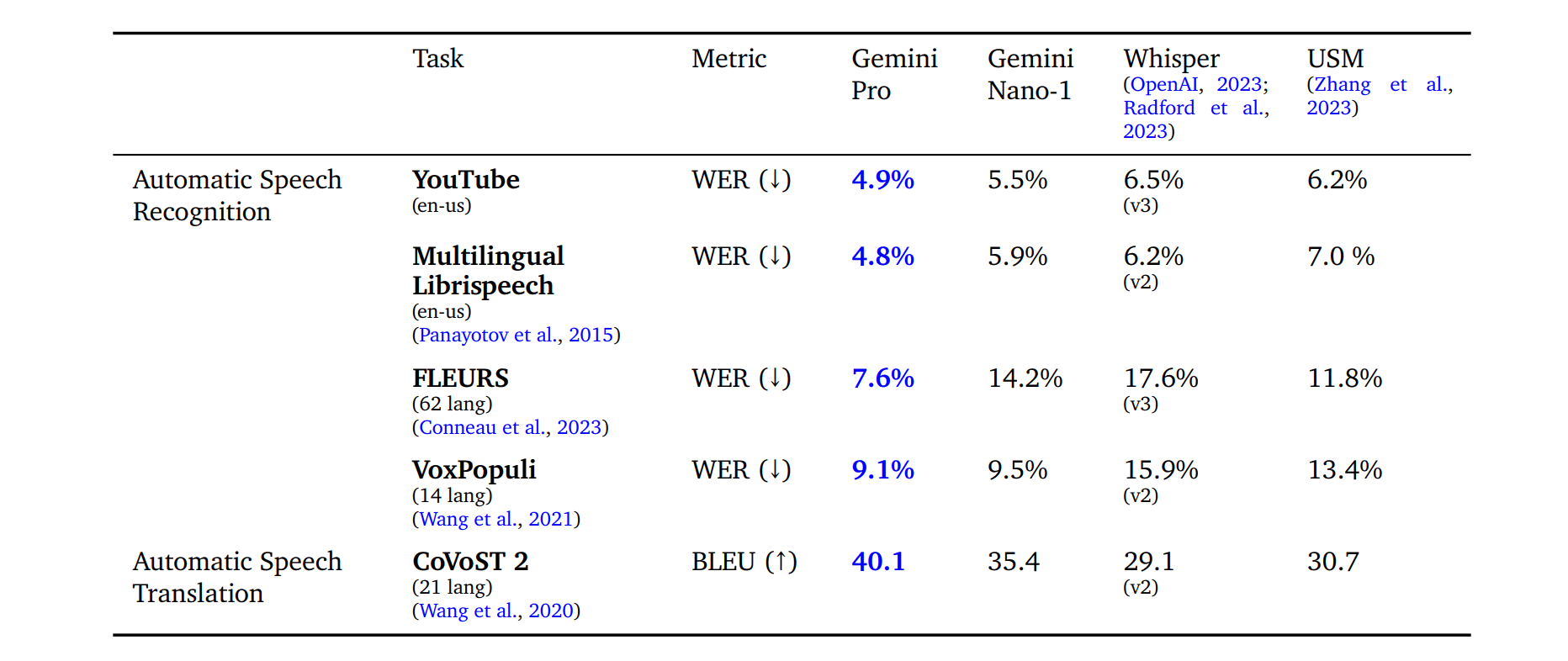

We evaluate the Gemini Nano-1 and Gemini Pro models on a variety of public benchmarks and compare them with the Universal Speech Model (USM) (Zhang et al., 2023) and Whisper (large-v2 (Radford et al., 2023) or large-v3 (OpenAI, 2023) as indicated). These benchmarks include automatic speech recognition (ASR) tasks such as FLEURS (Conneau et al., 2023), VoxPopuli (Wang et al., 2021), Multi-lingual Librispeech (Panayotov et al., 2015), as well as the speech translation task CoVoST 2, translating different languages into English (Wang et al., 2020). We also report on an internal benchmark YouTube test set. ASR tasks report a word error rate (WER) metric, where a lower number is better. Translation tasks report a BiLingual Evaluation Understudy (BLEU) score, where a higher number is better. FLEURS is reported on 62 languages that have language overlap with the training data. Four segmented languages (Mandarin, Japanese, Korean and Thai) report character error rate (CER), instead of WER, similar to Whisper (Radford et al., 2023).
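For readers unfamiliar with the metrics, the sketch below shows the standard definition of WER and CER as edit distance divided by reference length. It is an illustration only: the function names are ours, and real evaluation pipelines typically normalize text (casing, punctuation) before scoring, which this sketch does not attempt.

```python
def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER: word-level edit distance over the number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER: character-level edit distance, ignoring spaces."""
    ref_chars = list(reference.replace(" ", ""))
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref_chars, list(hypothesis.replace(" ", ""))) / len(ref_chars)


# One substitution and one deletion against a 6-word reference.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

CER is the natural choice for languages written without spaces between words, since there is no unambiguous word segmentation to count against.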

Table 11 indicates that our Gemini Pro model significantly outperforms the USM and Whisper models across all ASR and AST tasks, both for English and multilingual test sets. Note that there is a large gain in FLEURS, compared to USM and Whisper, as our model is also trained with the FLEURS training dataset. However, training the same model without the FLEURS dataset results in a WER of 15.8, which still outperforms Whisper. The Gemini Nano-1 model also outperforms both USM and Whisper on all datasets except FLEURS. Note that we have not yet evaluated Gemini Ultra on audio, though we expect better performance from increased model scale.

Table 11 | Speech evaluation results on selected benchmarks for ASR and AST. For ASR, the reported metric is WER where lower is better. For AST, the reported metric is BLEU where higher is better.

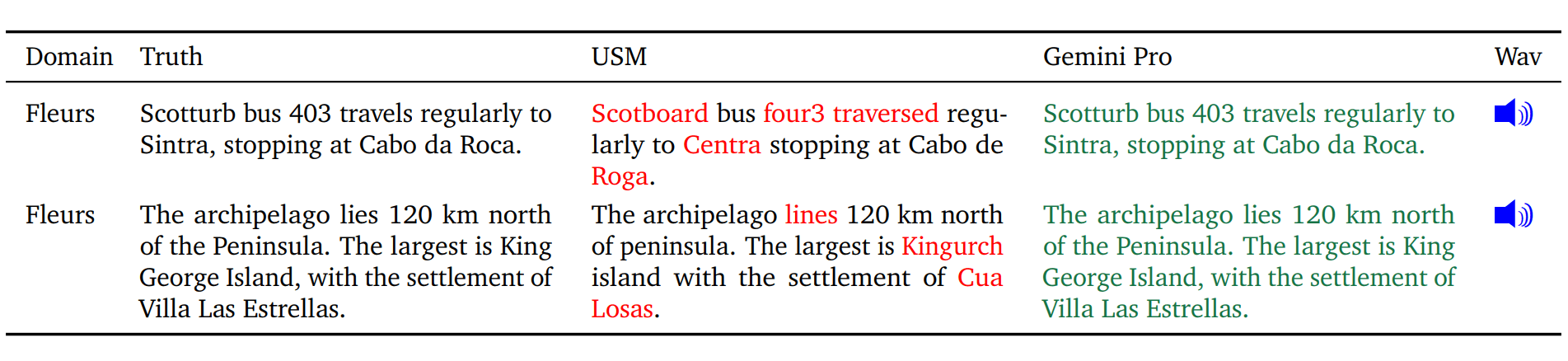

Table 12 shows further error analysis with USM and Gemini Pro. We find that Gemini Pro produces more understandable responses, particularly on rare words and proper nouns.

- Wav:

- https://storage.googleapis.com/deepmind-media/gemini/fleurs1.wav

- https://storage.googleapis.com/deepmind-media/gemini/fleurs2.wav

Table 12 | Qualitative examples for the ASR task in the benchmark. Incorrect transcriptions are highlighted in red.

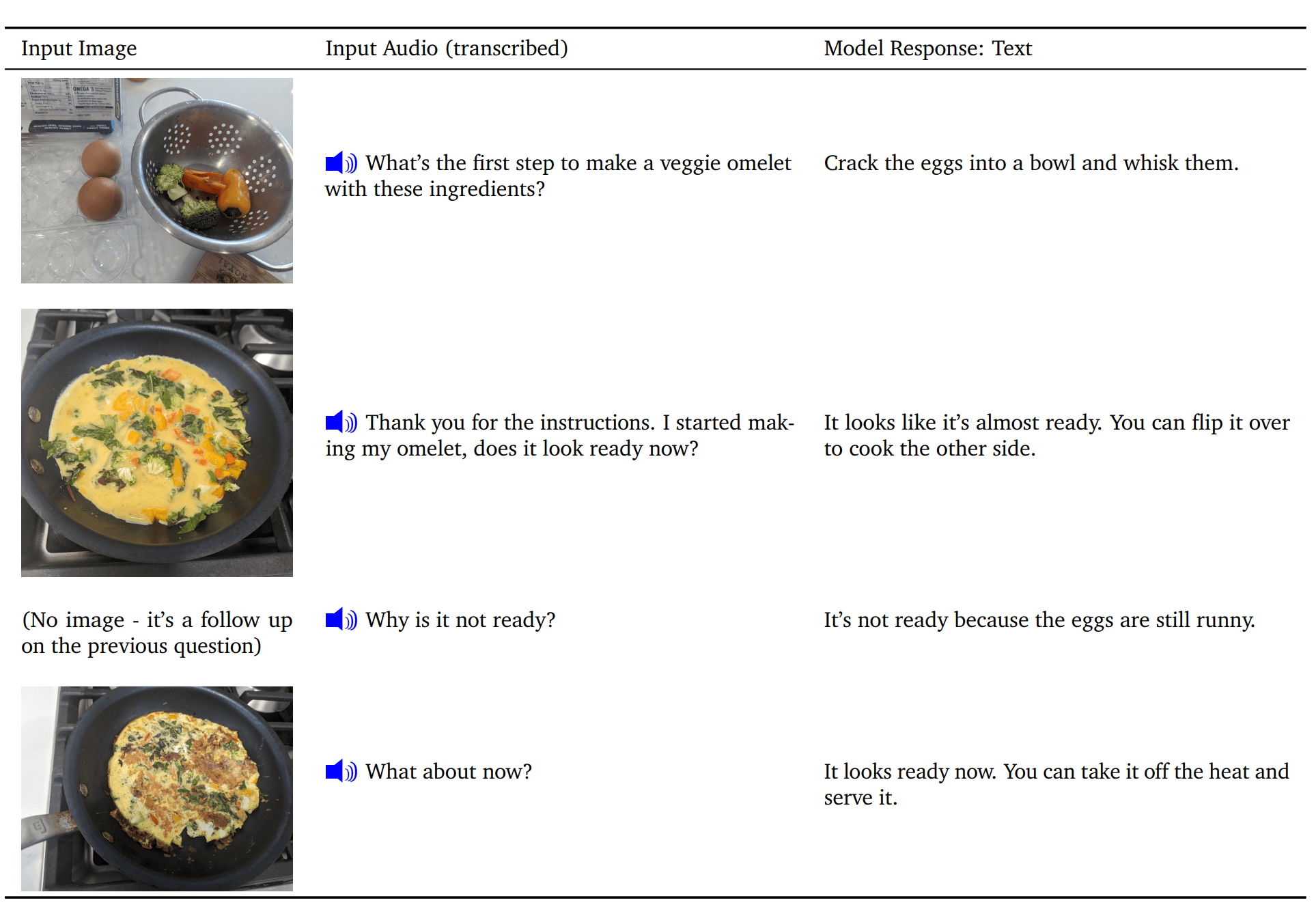

5.2.5. Modality Combination

Multimodal demonstrations often include a combination of text interleaved with a single modality, usually images. We demonstrate the ability to process a sequence of audio and images natively.

Consider a cooking scenario about making an omelet where we prompt the model with a sequence of audio and images. Table 13 indicates a turn-by-turn interaction with the model, providing pictures and verbally asking questions about the next steps for cooking an omelet. We note that the model response text is reasonably accurate, and shows that the model processes fine-grained image details to evaluate when the omelet is fully cooked. See demo on the website.

- Wav:

- https://storage.googleapis.com/deepmind-media/gemini/gemini_av_input_1.wav

- https://storage.googleapis.com/deepmind-media/gemini/gemini_av_input_2.wav

- https://storage.googleapis.com/deepmind-media/gemini/gemini_av_input_3.wav

- https://storage.googleapis.com/deepmind-media/gemini/gemini_av_input_4.wav

Table 13 | Audio-visual qualitative example showcasing the ability of Gemini models to process interleaved sequences of text, vision, and audio, as well as reason across modalities. This example inputs interleaved images and audio from the user in a cooking scenario. The user prompts the model for instructions to make an omelet and to inspect whether it is fully cooked.

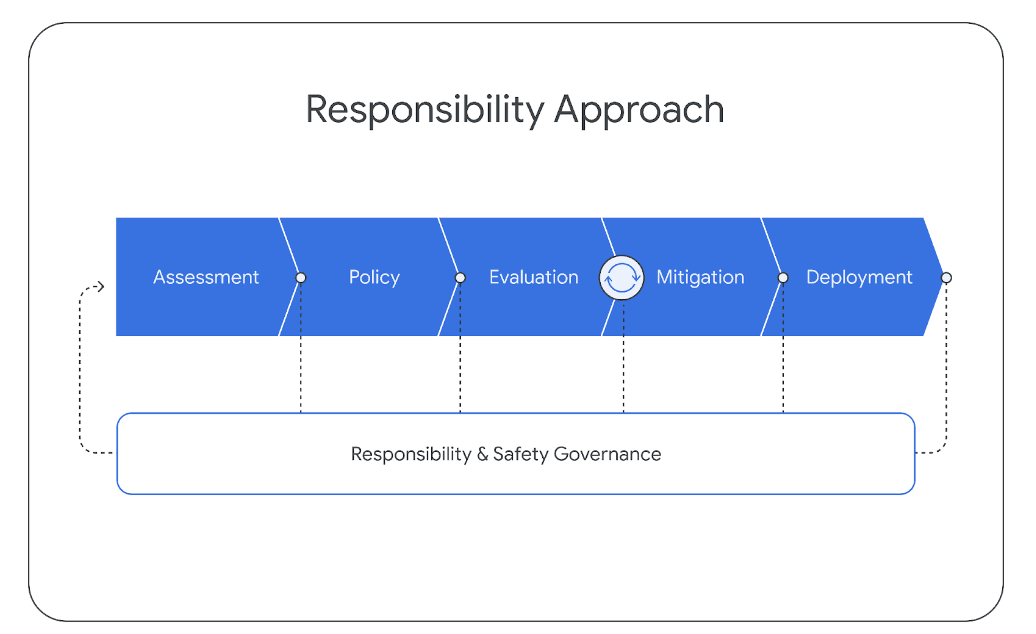

6. Responsible Deployment

During the development of the Gemini models, we follow a structured approach to responsible deployment in order to identify, measure, and manage foreseeable downstream societal impacts of our models, in line with previous releases of Google's AI technology (Kavukcuoglu et al., 2022). Throughout the lifecycle of the project, we follow the structure below. This section outlines our broad approach and key findings through this process. We will share more details on this in an upcoming report.

6.1. Impact Assessment

We develop model impact assessments to identify, assess, and document key downstream societal benefits and harms associated with the development of advanced Gemini models. These are informed by prior academic literature on language model risks (Weidinger et al., 2021), findings from similar prior exercises conducted across the industry (Anil et al., 2023; Anthropic, 2023; OpenAI, 2023a), ongoing engagement with experts internally and externally, and unstructured attempts to discover new model vulnerabilities. Areas of focus include: factuality, child safety, harmful content, cybersecurity, biorisk, representation and inclusivity. These assessments are updated in tandem with model development.

Impact assessments are used to guide mitigation and product delivery efforts, and inform deployment decisions. Gemini impact assessments spanned different capabilities of Gemini models, assessing the potential consequences of these capabilities against Google's AI Principles (Google, 2023).

6.2. Model Policy

Building upon this understanding of known and anticipated effects, we developed a set of "model policies" to steer model development and evaluations. Model policy definitions act as standardized criteria and a prioritization schema for responsible development and as an indication of launch-readiness. Gemini model policies cover a number of domains including: child safety, hate speech, factual accuracy, fairness and inclusion, and harassment.

6.3. Evaluations

To assess the Gemini models against policy areas and other key risk areas identified within impact assessments, we developed a suite of evaluations across the lifecycle of model development.

Development evaluations are conducted for the purpose of 'hill-climbing' throughout training and fine-tuning Gemini models. These evaluations are designed by the Gemini team, or are assessments against external academic benchmarks. Evaluations consider issues such as helpfulness (instruction following and creativity), safety and factuality. See section 5.1.6 and the next section on mitigations for a sample of results.

Assurance evaluations are conducted for the purpose of governance and review, usually at the end of key milestones or training runs by a group outside of the model development team. Assurance evaluations are standardized by modality and datasets are strictly held-out. Only high-level insights are fed back into the training process to assist with mitigation efforts. Assurance evaluations include testing across Gemini policies, and include ongoing testing for dangerous capabilities such as potential biohazards, persuasion, and cybersecurity (Shevlane et al., 2023).

External evaluations are conducted by partners outside of Google to identify blindspots. External groups stress-test our models across a range of issues, including across areas listed in the White House Commitments,⁷ and tests are conducted through a mixture of structured evaluations and unstructured red teaming. The design of these evaluations is independent and results are reported periodically to the Google DeepMind team.

In addition to this suite of external evaluations, specialist internal teams conduct ongoing red teaming of our models across areas such as the Gemini policies and security. These activities include less structured processes involving sophisticated adversarial attacks to identify new vulnerabilities. Discovery of potential weaknesses can then be used to mitigate risks and improve evaluation approaches internally. We are committed to ongoing model transparency and plan to share additional results from across our evaluation suite over time.

6.4. Mitigations

Mitigations are developed in response to the outcomes of the assessment, policy, and evaluation approaches described above. Evaluations and mitigations are used in an iterative way, with evaluations being re-run following mitigation efforts. We discuss our efforts on mitigating model harms across data, instruction-tuning, and factuality below.

6.4.1. Data

Prior to training, we take various steps to mitigate potential downstream harms at the data curation and data collection stage. As discussed in the section on "Training Data", we filter training data for high-risk content and to ensure all training data is sufficiently high quality. Beyond filtering, we also take steps to ensure all data collected meets Google DeepMind's best practices on data enrichment,⁸ developed based on the Partnership on AI's "Responsible Sourcing of Data Enrichment Services"⁹. This includes ensuring all data enrichment workers are paid at least a local living wage.

6.4.2. Instruction Tuning

Instruction tuning encompasses supervised fine tuning (SFT) and reinforcement learning through human feedback (RLHF) using a reward model. We apply instruction tuning in both text and multimodal settings. Instruction tuning recipes are carefully designed to balance the increase in helpfulness with decrease in model harms related to safety and hallucinations (Bai et al., 2022a).

Curation of "quality" data is critical for SFT, reward model training, and RLHF. The data mixture ratios are ablated with smaller models to balance the metrics on helpfulness (such as instruction following, creativity) and reduction of model harms, and these results generalize well to larger models. We have also observed that data quality is more important than quantity (Touvron et al., 2023b; Zhou et al., 2023), especially for larger models. Similarly, for reward model training, we find it critical to balance the dataset with examples where the model prefers to say, "I cannot help with that," for safety reasons and examples where the model outputs helpful responses. We use multi-objective optimization with a weighted sum of reward scores from helpfulness, factuality, and safety, to train a multi-headed reward model.
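The weighted-sum objective mentioned above can be sketched as follows. The weights and scores below are purely illustrative assumptions; the report does not disclose the actual weights, score ranges, or how the multi-headed reward model is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardScores:
    """Per-objective scores, e.g. one per head of a multi-headed reward model."""
    helpfulness: float
    factuality: float
    safety: float


def combined_reward(scores: RewardScores, weights: RewardScores) -> float:
    """Weighted sum of the three reward scores."""
    return (weights.helpfulness * scores.helpfulness
            + weights.factuality * scores.factuality
            + weights.safety * scores.safety)


# Hypothetical weights and scores, chosen only for illustration.
weights = RewardScores(helpfulness=0.5, factuality=0.25, safety=0.25)
scores = RewardScores(helpfulness=0.8, factuality=0.6, safety=1.0)
print(combined_reward(scores, weights))
```

Collapsing several objectives into one scalar keeps the optimization simple, at the cost of making the trade-off between helpfulness and harm reduction depend entirely on the chosen weights.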

We further elaborate our approach to mitigate risks of harmful text generation. We enumerate approximately 20 harm types (e.g. hate speech, providing medical advice, suggesting dangerous behavior) across a wide variety of use cases. We generate a dataset of potential harm-inducing queries in these categories, either manually by policy experts and ML engineers, or via prompting high capability language models with topical keywords as seeds.

Given the harm-inducing queries, we probe our Gemini models and analyze the model responses via side-by-side evaluation. As discussed above, we balance the objective of model output response being harmless versus being helpful. From the detected risk areas, we create additional supervised fine-tuning data to demonstrate the desirable responses. To generate such responses at scale, we heavily rely on a custom data generation recipe loosely inspired by Constitutional AI (Bai et al., 2022b), where we inject variants of Google's content policy language as "constitutions", and utilize the language model's strong zero-shot reasoning abilities (Kojima et al., 2022) to revise responses and choose between multiple response candidates. We have found this recipe to be effective - for example in Gemini Pro, this overall recipe was able to mitigate a majority of our identified text harm cases, without any perceptible decrease in response helpfulness.

6.4.3. Factuality

It is important that our models generate responses that are factual in a variety of scenarios, and that the frequency of hallucinations is reduced. We focused instruction tuning efforts on three key desired behaviors, reflecting real-world scenarios:

- Attribution: If instructed to generate a response that should be fully attributed to a given context in the prompt, Gemini should produce a response with the highest degree of faithfulness to the context (Rashkin et al., 2023). This includes the summarization of a user-provided source, generating fine-grained citations given a question and provided snippets akin to Menick et al. (2022); Peng et al. (2023), answering questions from a long-form source such as a book (Mihaylov et al., 2018), and transforming a given source to a desired output (e.g. an email from a portion of a meeting transcript).

- Closed-Book Response Generation: If provided with a fact-seeking prompt without any given source, Gemini should not hallucinate incorrect information (see Section 2 of Roberts et al. (2020) for a definition). These prompts can range from information-seeking prompts (e.g. "Who is the prime minister of India?") to semi-creative prompts that may request factual information (e.g. "Write a 500-word speech in favor of the adoption of renewable energy").

- Hedging: If prompted with an input such that it is "unanswerable", Gemini should not hallucinate. Rather, it should acknowledge that it cannot provide a response by hedging. These include scenarios where the input prompt contains false-premise questions (see examples in Hu et al. (2023)), the input prompt instructs the model to perform open-book QA, but the answer is not derivable from the given context, and so forth.

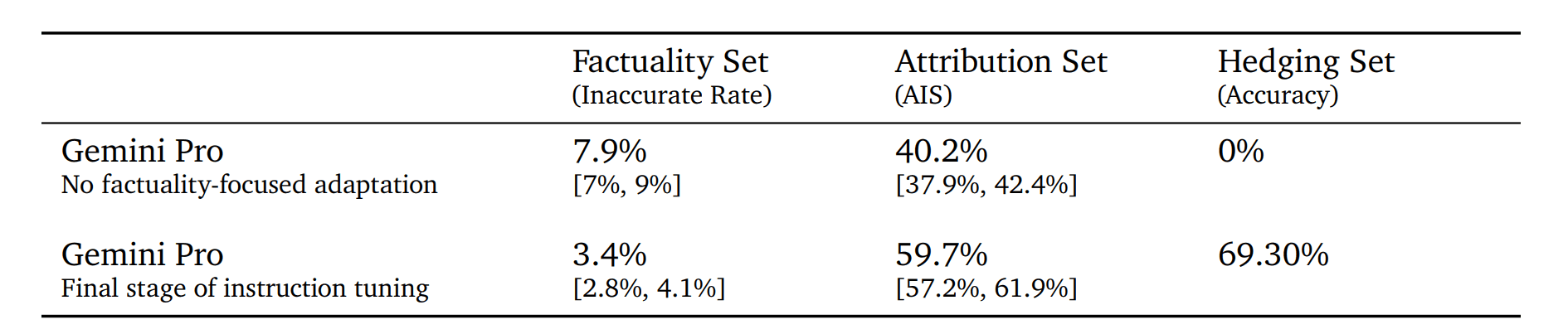

We elicited these desired behaviors from Gemini models by curating targeted supervised fine-tuning datasets and performing RLHF. Note that the results produced here do not include endowing Gemini with tools or retrieval that purportedly could boost factuality (Menick et al., 2022; Peng et al., 2023). We provide three key results on respective challenge sets below.

- Factuality Set: An evaluation set containing fact-seeking prompts (primarily closed-book). This is evaluated via human annotators who fact-check each response manually; we report the percentage of factually-inaccurate responses as judged by annotators.

- Attribution Set: An evaluation set containing a variety of prompts that require attribution to sources in the prompt. This is evaluated via human annotators who check for attribution to sources in the prompt for each response manually; the reported metric is AIS (Rashkin et al., 2023).

- Hedging Set: An automatic evaluation setup where we measure whether Gemini models hedge accurately.

We compare Gemini Pro with a version of the instruction-tuned Gemini Pro model without any factuality-focused adaptation in Table 14. We observe that the rate of inaccuracy is halved on the factuality set, the accuracy of attribution is increased by 50% on the attribution set, and the model successfully hedges 70% of the time (up from 0%) on the provided hedging set task.

Table 14 | Factuality mitigations: Impact of instruction-tuning on the rate of inaccuracy, presence of attribution and the rate of accurate hedging (with corresponding 95% confidence intervals).

6.5. Deployment

Following the completion of reviews, model cards for each approved Gemini model are created for structured and consistent internal documentation of critical performance and responsibility metrics as well as to inform appropriate external communication of these metrics over time.

6.6. Responsible Governance

Across the responsible development process, we undertake ethics and safety reviews with Google DeepMind's Responsibility and Safety Council (RSC),¹⁰ an interdisciplinary group which evaluates Google DeepMind's projects, papers and collaborations against Google's AI Principles. The RSC provides input and feedback on impact assessments, policies, evaluations and mitigation efforts. During the Gemini project, the RSC set specific evaluation targets across key policy domains (e.g. child safety).

7. Discussion And Conclusion

We have presented Gemini, a new family of models that advance multimodal model capabilities in text, code, image, audio, and video. This technical report evaluates the capabilities of Gemini on a diverse set of widely-studied benchmarks, and our most capable model Gemini Ultra makes significant advances across the board. In the natural language domain, the performance gains from careful developments in data and model training at scale continue to deliver quality improvements, setting new state of the art in several benchmarks. In particular, Gemini Ultra surpasses human-expert performance on the exam benchmark MMLU, scoring 90.0%; MMLU has been a de facto measure of progress for LLMs ever since it was first released in 2020. In the multimodal domain, Gemini Ultra sets new state of the art on most of the image understanding, video understanding, and audio understanding benchmarks without task-specific modifications or tuning. In particular, Gemini Ultra's multimodal reasoning capabilities are evident from its state-of-the-art performance on the recent MMMU benchmark (Yue et al., 2023), which comprises questions about images requiring college-level subject knowledge and deliberate reasoning.

Beyond the state-of-the-art results on benchmarks, what we are most excited about is the new use cases enabled by Gemini models. The new capabilities of Gemini models to parse complex images, such as charts or infographics, reason over interleaved sequences of images, audio, and text, and generate interleaved text and images as responses open a wide variety of new applications. As shown in figures throughout the report and appendix, Gemini can enable new approaches in areas like education, everyday problem solving, multilingual communication, information summarization, extraction, and creativity. We expect that the users of these models will find all kinds of beneficial new uses that we have only scratched the surface of in our own investigations.

Despite their impressive capabilities, we should note that there are limitations to the use of LLMs. There is a continued need for ongoing research and development on "hallucinations" generated by LLMs to ensure that model outputs are more reliable and verifiable. LLMs also struggle with tasks requiring high-level reasoning abilities like causal understanding, logical deduction, and counterfactual reasoning even though they achieve impressive performance on exam benchmarks. This underscores the need for more challenging and robust evaluations to measure their true understanding as the current state-of-the-art LLMs saturate many benchmarks.

Gemini is a further step towards our mission to solve intelligence, advance science and benefit humanity, and we are enthusiastic to see how these models are used by our colleagues at Google and beyond. We build on many innovations in machine learning, data, infrastructure, and responsible development - areas that we have been pursuing at Google for over a decade. The models we present in this report provide a strong foundation towards our broader future goal to develop a large-scale, modularized system that will have broad generalization capabilities across many modalities.

References

Not included here. For detailed references, please consult the PDF version of the paper.

8. Contributions And Acknowledgments

The contributor list is not included here. For the full list, please consult the PDF version of the paper.

The roles are defined as below:

- Lead: Individual(s) responsible for the sub-team throughout the project.

- Core Contributor: Individual that had significant impact throughout the project.

- Contributor: Individual that had contributions to the project and was partially involved with the effort.

- Program Lead: Responsible for the organizational aspects of the Gemini effort.

- Overall Technical Lead: Responsible for the technical direction of the overall Gemini effort.

Within each role, contributions are equal, and are listed in a randomized order. Ordering within each role does not indicate ordering of the contributions.

Gemini is a cross-Google effort, with members from Google DeepMind (GDM), Google Research (GR), Knowledge and Information (K&I), Core ML, Cloud, Labs, and more.

We thank our reviewers and colleagues for their valuable discussions and feedback on the report — Alexandra Belias, Arielle Bier, Eleanor Tomlinson, Elspeth White, Emily Hossellman, Gaby Pearl, Helen King, Hollie Dobson, Jaclyn Konzelmann, Jason Gelman, Jennifer Beroshi, Joel Moss, Jon Small, Jonathan Fildes, Oli Gaymond, Priya Jhakra, Rebecca Bland, Reena Jana, and Tom Lue.

Our work is made possible by the dedication and efforts of numerous teams at Google. We would like to acknowledge the support from Abhi Mohan, Adekunle Bello, Aishwarya Nagarajan, Alejandro Lince, Alexander Chen, Alexander Kolbasov, Alexander Schiffhauer, Amar Subramanya, Ameya Shringi, Amin Vahdat, Anda Rabatić, Anthonie Gross, Antoine Yang, Anthony Green, Anton Ruddock, Art Khurshudov, Artemis Chen, Arthur Argenson, Avinatan Hassidim, Beiye Liu, Bin Ni, Brett Daw, Bryan Chiang, Burak Gokturk, Carey Radebaugh, Carl Crous, Carrie Grimes Bostock, Charbel Kaed, Charlotte Banks, Che Diaz, Chris Larkin, Christy Lian, Claire Cui, Clement Farabet, Daniel Herndon, Dave Burke, David Battle, David Engel, Dipannita Shaw, Donghyun Koo, Doug Ritchie, Dragos Stefanescu, Emre Sargin, Eric Herren, Estella King, Fatema Alkhanaizi, Fernando Pereira, Gabriel Carvajal, Gaurav Gandhi, Goran Pavičić, Harry Richardson, Hassan Wassel, Hongji Li, Igor Ivanisevic, Ivan Jambrešić, Ivan Jurin, Jade Fowler, Jay Yagnik, Jeff Seibert, Jenna LaPlante, Jessica Austin, Jianxing Lu, Jin Huang, Jonathan Caton, Josh Woodward, Joshua Foster, Katrina Wong, Kelvin Nguyen, Kira Yin, Konstantin Sharlaimov, Kun Li, Lee Hong, Lilly Taylor, Longfei Shen, Luc Mercier, Mania Abdi, Manuel Sanchez, Mario Carlos Cortes III, Mehdi Ghissassi, Micah Mosley, Michael Bendersky, Michael Harris, Mihir Paradkar, Nandita Dukkipati, Nathan Carter, Nathan Watson, Nikhil Dandekar, Nishant Ranka, Obaid Sarvana, Olcan Sercinoglu, Olivier Lacombe, Pranesh Srinivasan, Praveen Kumar, Rahul Sukthankar, Raia Hadsell, Rajagopal Ananthanarayanan, Roberto Lupi, Rosie Zou, Sachin Menezes, Sadegh Jazayeri, Sameer Bidichandani, Sania Alex, Sanjiv Kumar, Sarah Fitzgerald, Sebastian Nowozin, Shannon Hepburn, Shayne Cardwell, Sissie Hsiao, Srinivasan Venkatachary, Sugato Basu, Sundar Pichai, Sundeep Tirumalareddy, Susannah Young, Swetha Vijayaraghavan, Tania Bedrax-Weiss, Terry Chen, Ting Liu, Tom Cobley, Tomas Izo, Trystan Upstill, Varun Singhai, Vedrana Klarić Trupčević, Victor Cai, Vladimir Pudovkin, Vu Dang, Wenbo Zhao, Wesley Crow, Wesley Szeng, Xiaodan Song, Yazhou Zu, Ye Tian, Yicong Wang, Yixing Wang, Zachary Jessup, Zhenchuan Pang, Zimeng Yang, and Zoubin Ghahramani. We'd also like to recognize the AlphaCode team, the Borg Scheduling team, the Facilities team, the Gemini Demo Team, the Global Server Ops (GSO) team, the JAX team, the Legal team, the ML SRE team, the ML Supercomputer (MLSC) team, the PartIR team, the Platforms Infrastructure Engineering (PIE) team, and the XLA Compiler team.

We thank everyone at Google not explicitly mentioned above, who have shared excitement, given feedback on early Gemini models or created interesting demo uses of Gemini, and worked with or supported the core Gemini team on many aspects of this project.

9. Appendix

9.1. Chain-Of-Thought Comparisons On MMLU Benchmark

We contrast several chain-of-thought approaches on MMLU and discuss their results in this section. We propose a new approach where the model produces k chain-of-thought samples, selects the majority vote if the model is confident above a threshold, and otherwise defers to the greedy sample choice. The thresholds are optimized for each model based on their validation split performance. The proposed approach is referred to as uncertainty-routed chain-of-thought. The intuition behind this approach is that chain-of-thought samples might degrade performance compared to the maximum-likelihood decision when the model is demonstrably inconsistent. We compare the gains from the proposed approach on both Gemini Ultra and GPT-4 in Figure 7. We find that Gemini Ultra benefits more from this approach compared to using only chain-of-thought samples. GPT-4's performance improves from 84.2% with greedy sampling to 87.3% with the uncertainty-routed chain-of-thought approach with 32 samples, but it already achieves these gains from using 32 chain-of-thought samples. In contrast, Gemini Ultra improves its performance significantly from 84.0% with greedy sampling to 90.0% with the uncertainty-routed chain-of-thought approach with 32 samples, while it improves only marginally to 85.0% with the use of 32 chain-of-thought samples alone.
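The routing rule described above can be sketched in a few lines. This is an illustration under stated assumptions, not the report's implementation: it assumes confidence is measured as the vote share of the most common answer among the k samples, and the function names and the grid of candidate thresholds are hypothetical.

```python
from collections import Counter


def uncertainty_routed_answer(cot_answers: list[str], greedy_answer: str,
                              threshold: float) -> str:
    """Return the majority-vote answer if its vote share reaches the
    threshold, otherwise fall back to the greedy answer.

    Assumption: confidence is the fraction of samples agreeing with the
    most common answer; the report does not define it in this section.
    """
    if not cot_answers:
        return greedy_answer
    answer, votes = Counter(cot_answers).most_common(1)[0]
    confidence = votes / len(cot_answers)
    return answer if confidence >= threshold else greedy_answer


def select_threshold(validation_examples, candidate_thresholds):
    """Pick the candidate threshold with the best validation accuracy.

    validation_examples: iterable of (cot_answers, greedy_answer, gold_answer).
    """
    examples = list(validation_examples)

    def accuracy(threshold: float) -> float:
        correct = sum(
            uncertainty_routed_answer(cot, greedy, threshold) == gold
            for cot, greedy, gold in examples
        )
        return correct / len(examples)

    return max(candidate_thresholds, key=accuracy)


# 6 of 8 samples agree on "B" (share 0.75), so the vote is trusted at 0.7.
print(uncertainty_routed_answer(["B"] * 6 + ["A"] * 2, "A", threshold=0.7))
```

With a threshold of 0 this reduces to plain majority voting over the samples, and with a threshold above 1 it reduces to greedy decoding, so the tuned threshold interpolates between the two baselines compared in Figure 7.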

Figure 7 | Chain-of-Thought with uncertainty routing on MMLU.
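The routing rule described in this section can be sketched in a few lines of Python. This is a minimal illustration, not the report's implementation: the report does not specify how confidence is measured, so the sketch assumes it is the vote share of the most common sampled answer, and the names `uncertainty_routed_answer` and `pick_threshold` are hypothetical.

```python
from collections import Counter


def uncertainty_routed_answer(samples, greedy_answer, threshold):
    """Pick the majority-vote answer if its vote share reaches `threshold`,
    otherwise fall back to the greedy answer.

    Assumption: "confidence" is the fraction of the k chain-of-thought
    samples that agree with the most common answer.
    """
    if not samples:
        return greedy_answer
    majority, votes = Counter(samples).most_common(1)[0]
    confidence = votes / len(samples)
    return majority if confidence >= threshold else greedy_answer


def pick_threshold(validation, candidates):
    """Choose the candidate threshold with the best validation accuracy.

    `validation` is a list of (samples, greedy_answer, gold_answer) tuples.
    """
    def accuracy(threshold):
        correct = sum(
            uncertainty_routed_answer(samples, greedy, threshold) == gold
            for samples, greedy, gold in validation
        )
        return correct / len(validation)

    return max(candidates, key=accuracy)
```

With this sketch, a question whose samples agree strongly is answered by majority vote, while a question with scattered samples is answered by the greedy decode, which matches the intuition given above.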

9.2. Capabilities And Benchmarking Tasks

We use more than 50 benchmarks as a holistic harness to evaluate the Gemini models across text, image, audio and video. We provide a detailed list of benchmarking tasks for six different capabilities in text understanding and generation: factuality, long context, math/science, reasoning, summarization, and multilinguality. We also enumerate the benchmarks used for image understanding, video understanding, and audio understanding tasks.

- Factuality: We use 5 benchmarks: BoolQ (Clark et al., 2019), NaturalQuestions-Closed (Kwiatkowski et al., 2019), NaturalQuestions-Retrieved (Kwiatkowski et al., 2019), RealtimeQA (Kasai et al., 2022), TydiQA-noContext and TydiQA-goldP (Clark et al., 2020).

- Long Context: We use 6 benchmarks: NarrativeQA (Kočiský et al., 2018), Scrolls-Qasper, Scrolls-Quality (Shaham et al., 2022), XLSum (En), XLSum (non-English languages) (Hasan et al., 2021), and one other internal benchmark.

- Math/Science: We use 8 benchmarks: GSM8k (with CoT) (Cobbe et al., 2021), Hendryck's MATH pass@1 (Hendrycks et al., 2021b), MMLU (Hendrycks et al., 2021a), Math-StackExchange, Math-AMC 2022-2023 problems, and three other internal benchmarks.

- Reasoning: We use 7 benchmarks: BigBench Hard (with CoT) (Srivastava et al., 2022), CLRS (Veličković et al., 2022), Proof Writer (Tafjord et al., 2020), Reasoning-Fermi problems (Kalyan et al., 2021), Lambada (Paperno et al., 2016), HellaSwag (Zellers et al., 2019), DROP (Dua et al., 2019).

- Summarization: We use 5 benchmarks: XL Sum (English), XL Sum (non-English languages) (Hasan et al., 2021), WikiLingua (non-English languages), WikiLingua (English) (Ladhak et al., 2020), XSum (Narayan et al., 2018).

- Multilinguality: We use 10 benchmarks: XLSum (Non-English languages) (Hasan et al., 2021), WMT22 (Kocmi et al., 2022), WMT23 (Tom et al., 2023), FRMT (Riley et al., 2023), WikiLingua (Non-English languages) (Ladhak et al., 2020), TydiQA (no context), TydiQA (GoldP) (Clark et al., 2020), MGSM (Shi et al., 2023), translated MMLU (Hendrycks et al., 2021a), NTREX (Federmann et al., 2022), FLORES-200 (Team et al., 2022).

- Image and Video: We use 9 benchmarks for image understanding: MMMU (Yue et al., 2023), TextVQA (Singh et al., 2019), DocVQA (Mathew et al., 2021), ChartQA (Masry et al., 2022), InfographicVQA (Mathew et al., 2022), MathVista (Lu et al., 2023), AI2D (Kembhavi et al., 2016), VQAv2 (Goyal et al., 2017), XM3600 (Thapliyal et al., 2022) for multi-lingual image understanding, and 6 benchmarks for video understanding: VATEX (Wang et al., 2019) for captioning in two different languages, YouCook2 (Zhou et al., 2018), NextQA (Xiao et al., 2021), ActivityNet-QA (Yu et al., 2019), and Perception Test MCQA (Pătrăucean et al., 2023).

- Audio: We use 5 benchmarks including automatic speech recognition (ASR) tasks such as FLEURS (Conneau et al., 2023), VoxPopuli (Wang et al., 2021), Multi-lingual Librispeech (Panayotov et al., 2015), and automatic speech translation tasks such as CoVoST 2 (Wang et al., 2020).

9.3. Qualitative Examples

This section shows sample qualitative examples from prompting the Gemini Ultra model. Some illustrative examples of multimodal reasoning for image understanding tasks over charts, natural images and memes are shown in Figures 8, 9, 11, 13, 14, and 15. Figure 10 shows an example of the image generation capabilities of Gemini Ultra, where the user generates an interleaved sequence of image and text to design a blog post. Beyond English, Figure 16 shows the model's capability to understand images in a multilingual setting. Gemini models also show strong performance on multimodal image understanding and reasoning in mathematics, as shown in Figures 12, 18 and 19. Figure 20 is an example of complex multimodal reasoning demonstrating how the model composes complex image understanding, code generation, and instruction following capabilities for a given user task. In Figure 17, we see another example of the model being able to generate working code and follow complex user instructions. Finally, Figure 21 shows an example of Gemini Ultra's capability of understanding video by reasoning over a temporally connected set of frames.

9.3.1. Chart Understanding And Reasoning Over Data

Prompt

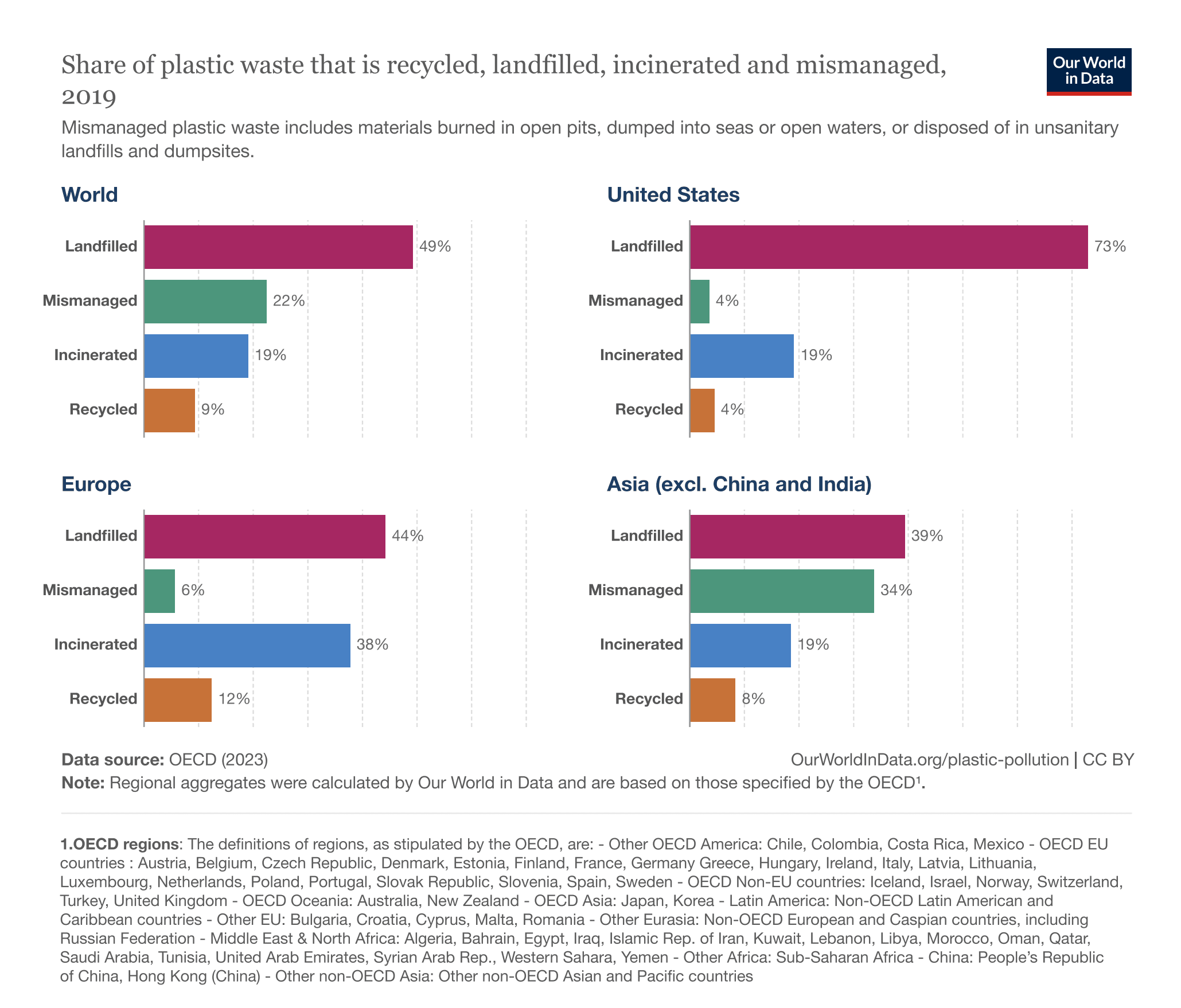

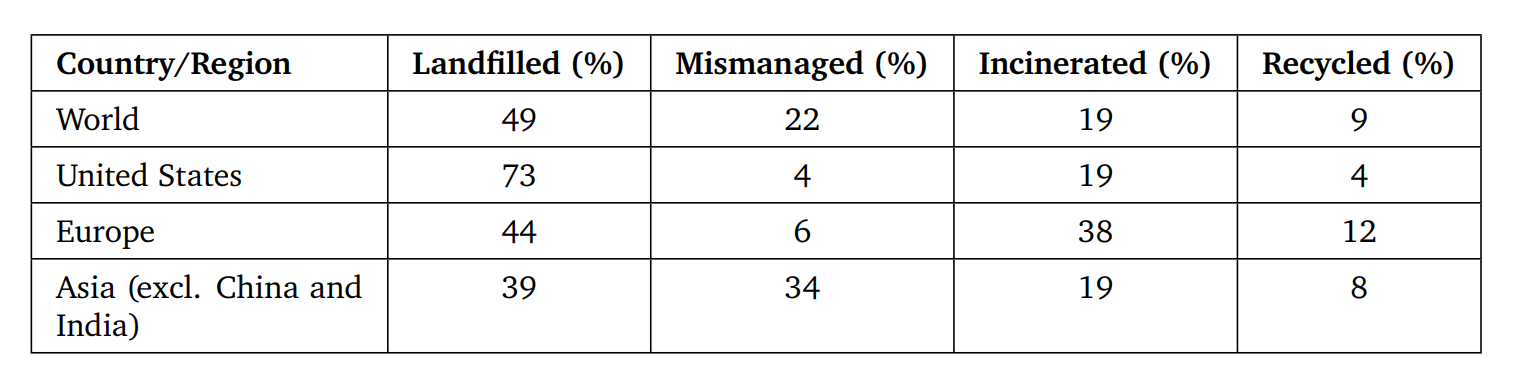

Spot a data point that stands out in these charts and what that implicates. Then produce a detailed markdown table for all the data shown.

Model Response (Rendered Markdown)

The data point that stands out is the United States' landfilled plastic waste, which is 73%. This is significantly higher than the other countries and regions shown. This implies that the United States is not doing as much to recycle or incinerate its plastic waste as other countries and regions.

Figure 8 | Solving a problem requiring multimodal chart understanding. The model has to read the text, understand the connections between different data points and reason over them to recommend an interesting point and follow the instructions to generate a markdown table (shown correctly rendered).

Source: Our World In Data (Ritchie et al., 2023).

9.3.2. Multimodal Question Answering

Prompt

Do you know what it this plant? How do I best take care of it?

Model Response

This is a Persian shield plant. It is a tropical plant that is native to Southeast Asia. It is a popular houseplant because it is easy to care for and has beautiful purple leaves.

Persian shield plants prefer bright, indirect light. They can tolerate some direct sunlight, but too much direct sunlight can scorch their leaves. They also prefer warm temperatures and high humidity.

Persian shield plants need to be watered regularly, but they should not be allowed to sit in water. The soil should be kept moist but not soggy. They also benefit from being misted regularly.

Persian shield plants can be fertilized every two weeks during the growing season. A balanced fertilizer is a good choice.

Persian shield plants are susceptible to pests, such as mealybugs and spider mites. These pests can be controlled with insecticidal soap or neem oil.

Persian shield plants can be propagated by stem cuttings. The cuttings should be taken in the spring or summer and should be rooted in water or a moist potting mix.

Figure 9 | Answering a multimodal information-seeking query. The model is able to recognize the specific plant shown in the image and provide information about it. The model shows robustness to typos as it is able to understand the user question despite them.

Source: photo taken by an author from the Gemini team.

9.3.3. Interleaved Image And Text Generation

Prompt

Please create a blog post about a trip to New York, where a dog and his owner had lots of fun. Include a few pictures of the dog posing happily at different landmarks.

Model Response

Hi, I'm Cooper. I'm a 2 year old Goldendoodle who loves to travel. Last month, my family and I went to New York City. It was my first time in the Big Apple, and I had a blast!

We stayed in a hotel near Times Square. It was so much fun to see all the bright lights and people. We went to see a Broadway show, and I even got to meet some of the actors.

One of my favorite parts of New York City was Central Park. It was so big and beautiful. I loved running around and playing fetch. We also went to the Statue of Liberty and the Empire State Building. I was so excited to see all the famous landmarks.

I had so much fun in New York City. It's definitely one of my favorite places to travel. I can't wait to go back someday!

Figure 10 | Generating interleaved text and images. The model is able to follow the instructions of generating a blog post with images closely related to the text and with dog images showing high levels of consistency across all images.

9.3.4. Image Understanding And Reasoning

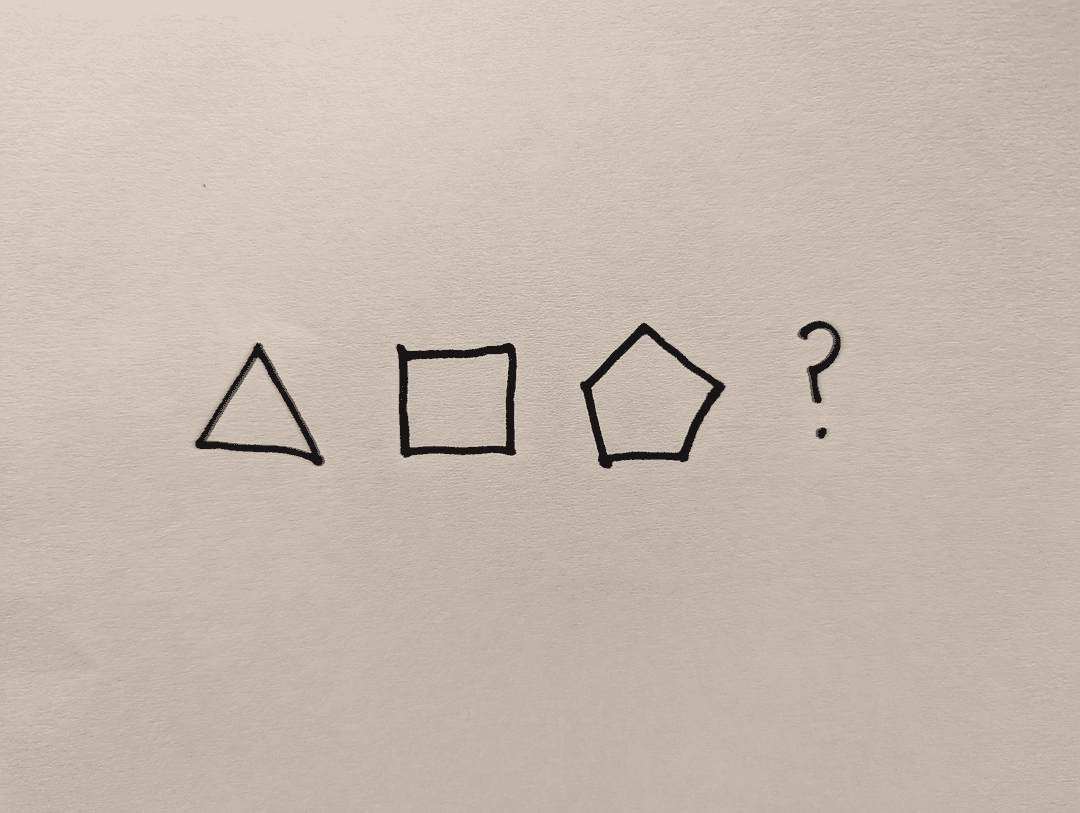

Prompt

Look at this sequence of three shapes. What shape should come as the fourth shape? Explain your reasoning with detailed descriptions of the first shapes.

Model Response

The fourth shape should be a hexagon. The first shape is a triangle, the second shape is a square, and the third shape is a pentagon. The number of sides in each shape is increasing by one

Figure 11 | Solving a multimodal reasoning problem. The model is able to recognize shapes in the image, understand their properties and reason about the relationship between them to predict the next object.

Source: photo taken by an author from the Gemini team.

9.3.5. Geometrical Reasoning

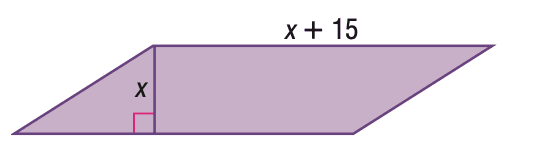

Prompt

Find the height of the parallelogram given its area with 100 square units.

Model Response

The area of the parallelogram is equal to the product of the base and the height. Hence 100 = (𝑥 + 15)𝑥. We get 𝑥² + 15𝑥 − 100 = 0. The roots of this equation are 𝑥₁ = 5 and 𝑥₂ = −20. The second root is negative and is not acceptable. Final Answer: The final answer is 5. I hope it is correct.

Figure 12 | Solving a geometrical reasoning task. The model shows good understanding of the task and is able to provide meaningful reasoning steps despite slightly unclear instructions.

Source: Lu et al. (2021).
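The quadratic in the response above, 𝑥² + 15𝑥 − 100 = 0, can be checked numerically. The following is a minimal sketch; the helper name `positive_roots` is hypothetical, and the coefficients are taken directly from the model response rather than from the figure.

```python
import math


def positive_roots(a: float, b: float, c: float) -> list[float]:
    """Return the positive real roots of a*x^2 + b*x + c = 0, sorted."""
    disc = b * b - 4 * a * c
    if disc < 0:
        return []  # no real roots
    sqrt_disc = math.sqrt(disc)
    roots = {(-b + sqrt_disc) / (2 * a), (-b - sqrt_disc) / (2 * a)}
    # A length must be positive, so negative roots are discarded.
    return sorted(r for r in roots if r > 0)


print(positive_roots(1, 15, -100))  # [5.0]
```

The discriminant is 15² + 400 = 625, so the roots are (−15 ± 25)/2, that is 5 and −20, and only 5 is admissible as a height.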

9.3.6. Information Seeking About Objects

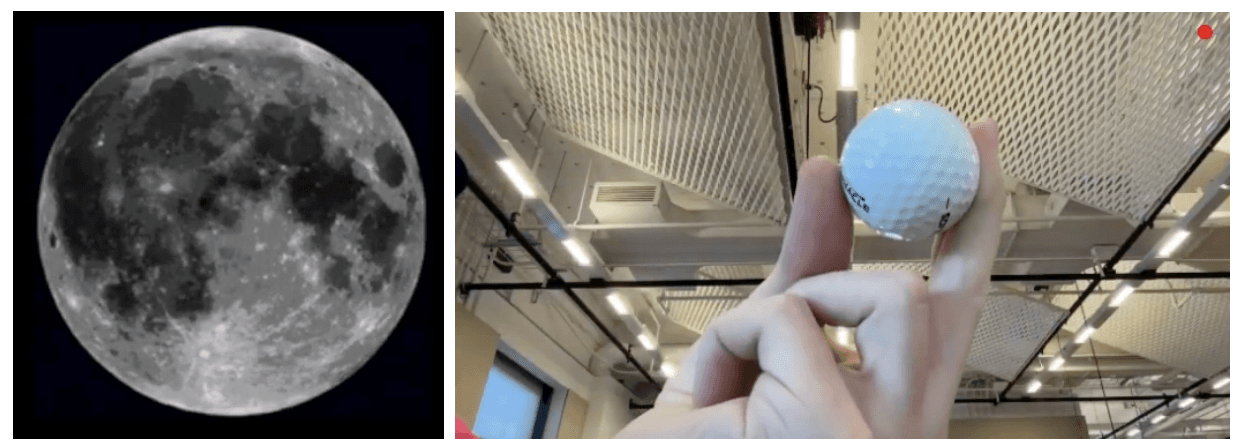

Prompt

Find a connection between these. Hint: think about historical events.

Model Response

The Moon is the only celestial body in which humans have played golf. In 1971, the Apollo 14 crew hit two golf balls on the lunar surface.

Figure 13 | Solving a puzzle using multimodal inputs. The model recognizes the objects in the images and identifies a commonality that connects the two objects.

Source: photo taken by an author from the Gemini team.

9.3.7. Multimodal Reasoning Based On Visual Cues

Prompt

Do you know the precise location where this image was taken?

Model Response

The street in the foreground is 8th Avenue. The cross street is West 34th Street.

Figure 14 | Identifying the objects in the image (the Empire State Building) and recognizing what those are even with small levels of visual distortion in the image. Based on the image, the model is also able to correctly identify the precise location of the person taking the photo.

Source: photo taken by an author from the Gemini team.

9.3.8. Multimodal Humor Understanding

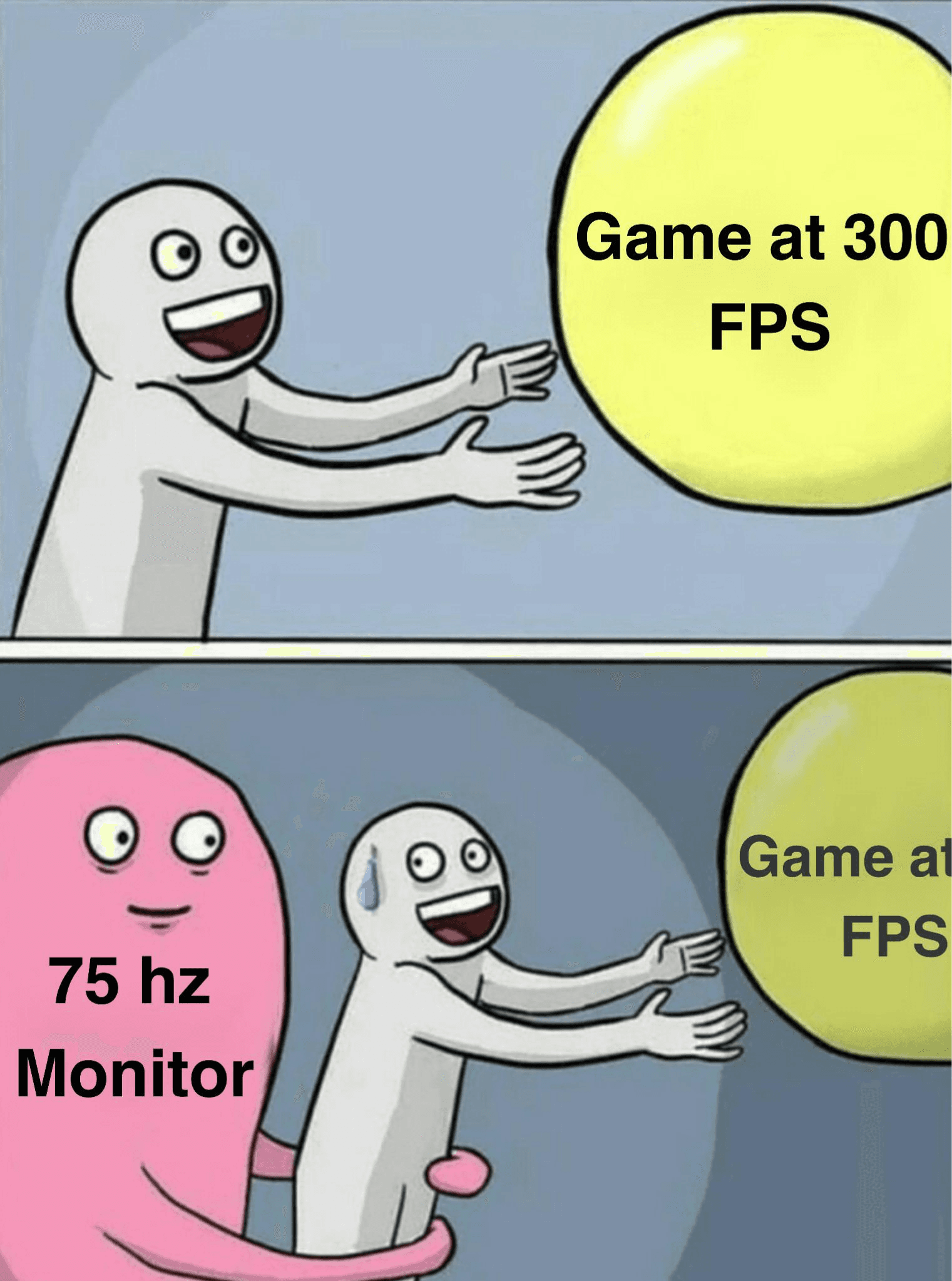

Prompt

Can you explain this meme?

Model Response

The meme is about a person who is playing a game at 300 FPS, but their monitor is only 75Hz. The person is trying to catch the game, but it is too fast for their monitor to display. The meme is funny because it is relatable to anyone who has ever played a game on a monitor that is not fast enough.

Figure 15 | Explanation of humor in a meme. The model is showing the ability to not only describe what is happening in the image but also what it means even though the cultural context is not mentioned explicitly in the image or the prompt.

Source: Hwang and Shwartz (2023).

9.4. Commonsense Reasoning In A Multilingual Setting

Prompt

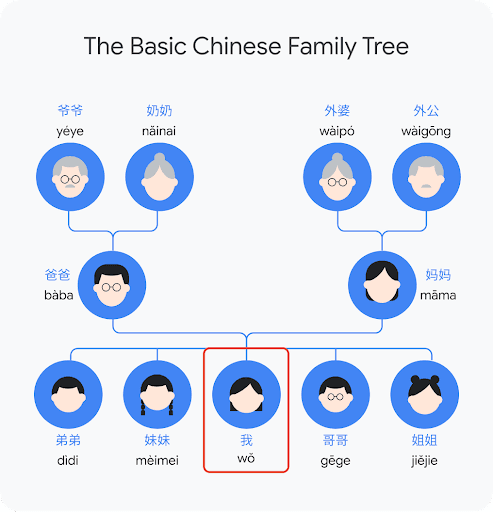

Explain what i should call my grandparents on each side of my family based on this chart

Model Response

On your father's side, you would call your grandparents Yéye and Nǎinai. On your mother's side, you would call your grandparents Wàigōng and Wàipó.

Figure 16 | Common-sense reasoning in images. The model is able to understand the relationships represented in the graphs and reason about them in a multilingual setting.

Source: image created by an author from the Gemini team.

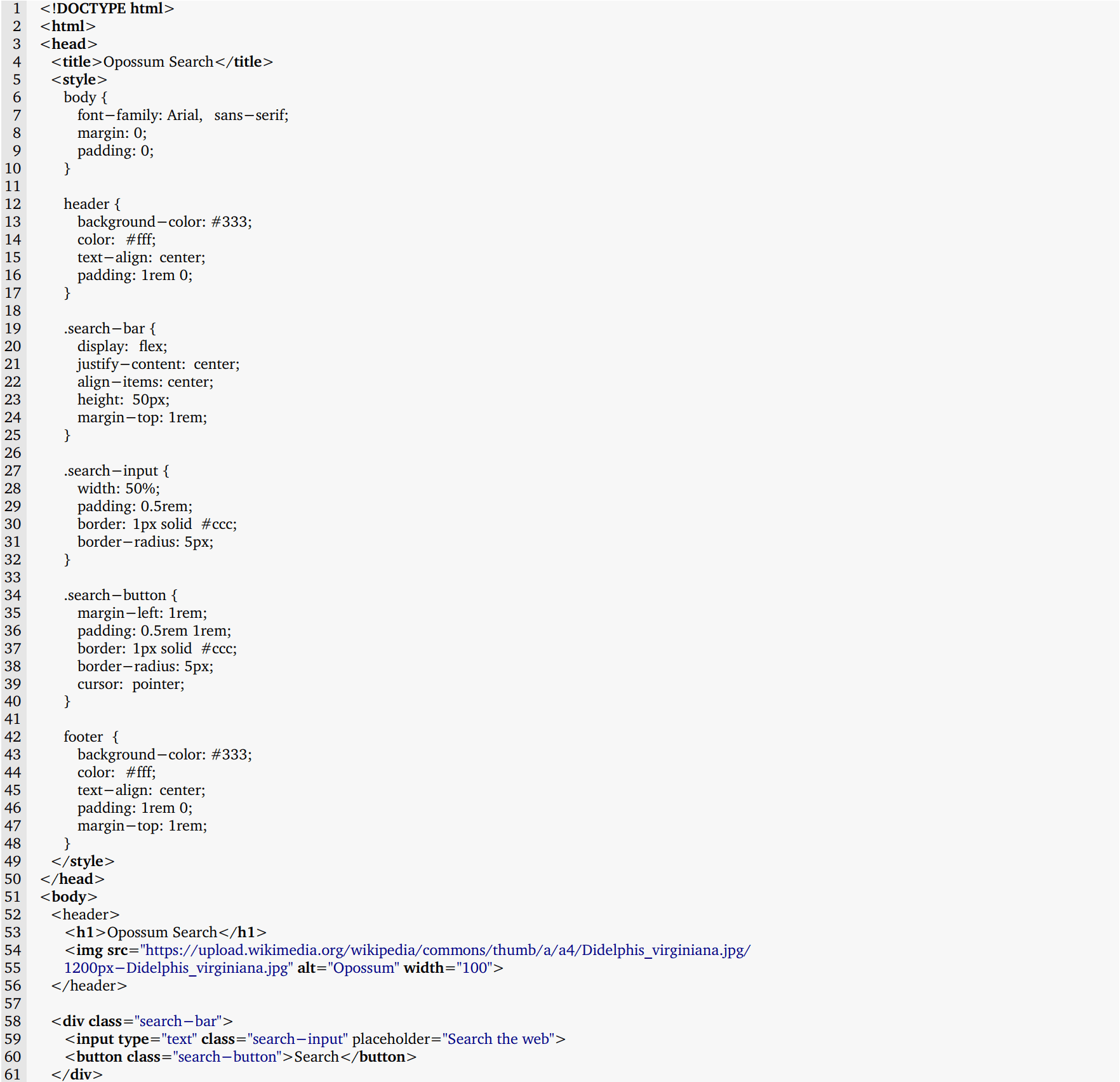

9.4.1. Reasoning And Code Generation

Prompt

Create a web app called "Opossum Search":

- Every time you make a search query, it should redirect you to a google search with the same query, but the word opossum before it.

- It should be visually similar to Google search,

- Instead of the google logo, it should have a picture of an opossum from the internet.

- It should be a single html file, no separate js or css files.

- It should say "Powered by google search" in the footer.

Model Response (Rendered Code)

Here is the code for Opossum Search:

This code creates a simple web app that looks similar to Google Search, but with an opossum logo. When you enter a search query and click the "Search" button, it will redirect you to a Google search with the word "opossum" added to the beginning of your query. The app is powered by Google Search, as indicated in the footer.

**Rendered Website**

Figure 17 | Writing code for a website based on user request. The model follows the instructions and requirements defined by the user and converts them to functioning code.
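The model's generated HTML is not reproduced in this version of the document. As a rough illustration of the redirect behaviour the prompt asks for, the query rewriting can be sketched as follows. This is written in Python rather than as the single HTML file the prompt requires, and the function name `opossum_search_url` is hypothetical.

```python
from urllib.parse import urlencode


def opossum_search_url(query: str) -> str:
    """Build a Google search URL with the word 'opossum' placed before the query."""
    params = urlencode({"q": f"opossum {query.strip()}"})
    return f"https://www.google.com/search?{params}"


print(opossum_search_url("cute pets"))
# https://www.google.com/search?q=opossum+cute+pets
```

In a web page, the same rewriting would typically happen in a form submit handler before redirecting the browser.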

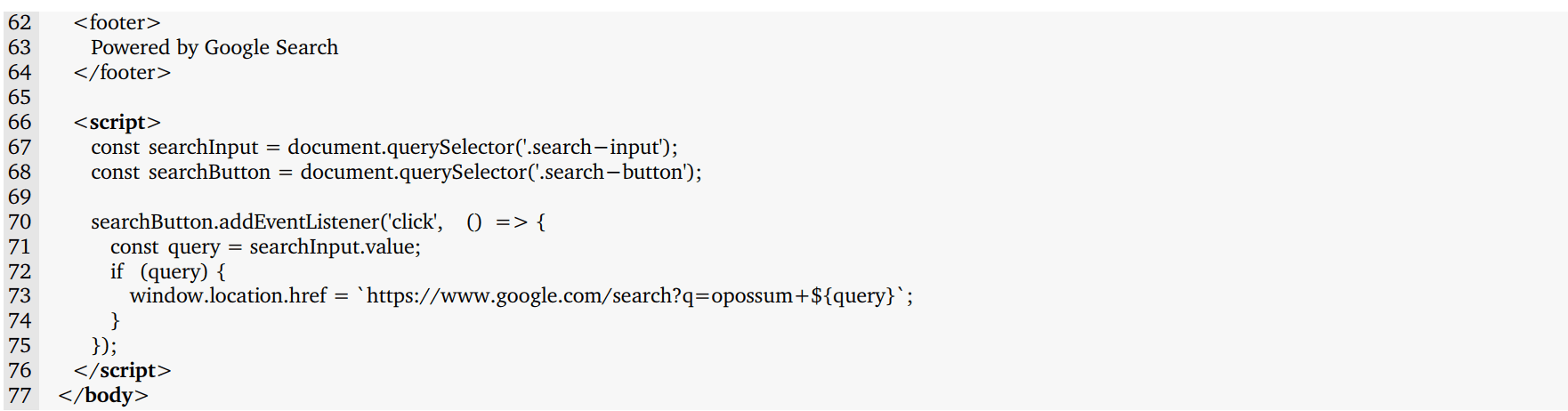

9.4.2. Mathematics: Calculus

Prompt

Find the derivative of sinh 𝑥 + cosh 𝑦 = 𝑥 + 𝑦.

Model Response (Rendered Latex)

Figure 18 | Solving a calculus problem. The model is able to get a solution to a calculus problem with step-by-step explanation and correctly defined LaTeX equations.

Source: question is provided by Macmillan Learning.
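The rendered model response is not reproduced in this version of the document. For reference, one way to work the problem is implicit differentiation, sketched below under the assumption that 𝑦 is a function of 𝑥 and that the quantity sought is d𝑦/d𝑥; this is an illustrative derivation, not a transcription of the model's output.

```latex
\begin{align*}
\frac{d}{dx}\left(\sinh x + \cosh y\right) &= \frac{d}{dx}\left(x + y\right) \\
\cosh x + \sinh y \,\frac{dy}{dx} &= 1 + \frac{dy}{dx} \\
\frac{dy}{dx}\left(\sinh y - 1\right) &= 1 - \cosh x \\
\frac{dy}{dx} &= \frac{1 - \cosh x}{\sinh y - 1}, \qquad \sinh y \neq 1
\end{align*}
```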

9.5. Multi-Step Reasoning And Mathematics

Prompt

In the year 2018 factory A produced goods worth 500 million forints, while factory B produced goods worth 400 million forints. According to their long-term plans, factory A will increase production by 5% each year, while factory B will increase production by 6% per year.

The above plans were published on an economy website. A debate started in the comment section of the article: one user claimed that the difference between the volumes of production between the two factories would get smaller and smaller in the next couple of years.

Calculate the missing values of the table below and show that the above claim is not true.

Header: | 2018 | 2019 | 2020 | 2021 |

Production volume of factory A (mln) | 500 | | | |

Production volume of factory B (mln) | 400 | | | |

Model Response (Rendered Markdown)

| Year | Production volume of factory A (mln) | Production volume of factory B (mln) | Difference (mln) |
|---|---|---|---|
| 2018 | 500 | 400 | 100 |
| 2019 | 525 | 424 | 101 |
| 2020 | 551.25 | 449.44 | 101.81 |
| 2021 | 578.81 | 476.41 | 102.4 |
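The compound-growth arithmetic behind the table can be reproduced with a short script. This is a minimal sketch; the function name `production_table` and its parameter names are hypothetical, and values are kept unrounded until they are printed.

```python
def production_table(a0=500.0, b0=400.0, growth_a=0.05, growth_b=0.06,
                     start=2018, end=2021):
    """Return (year, volume_a, volume_b, difference) rows under yearly compound growth."""
    rows = []
    for i, year in enumerate(range(start, end + 1)):
        a = a0 * (1 + growth_a) ** i
        b = b0 * (1 + growth_b) ** i
        rows.append((year, a, b, a - b))
    return rows


for year, a, b, diff in production_table():
    print(f"{year}: A={a:.2f}  B={b:.2f}  difference={diff:.2f}")
```

The difference grows every year over this period, which contradicts the claim in the comment section. The unrounded 2021 difference is about 102.41; the table's 102.4 matches the difference of the rounded entries, 578.81 − 476.41.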